Simulation Study to Determine Defaults

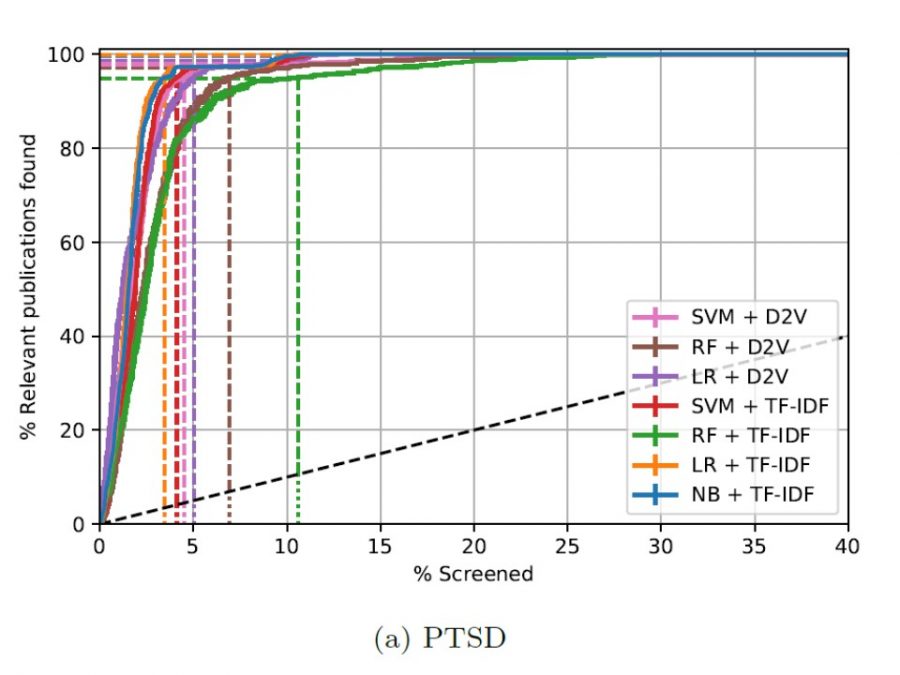

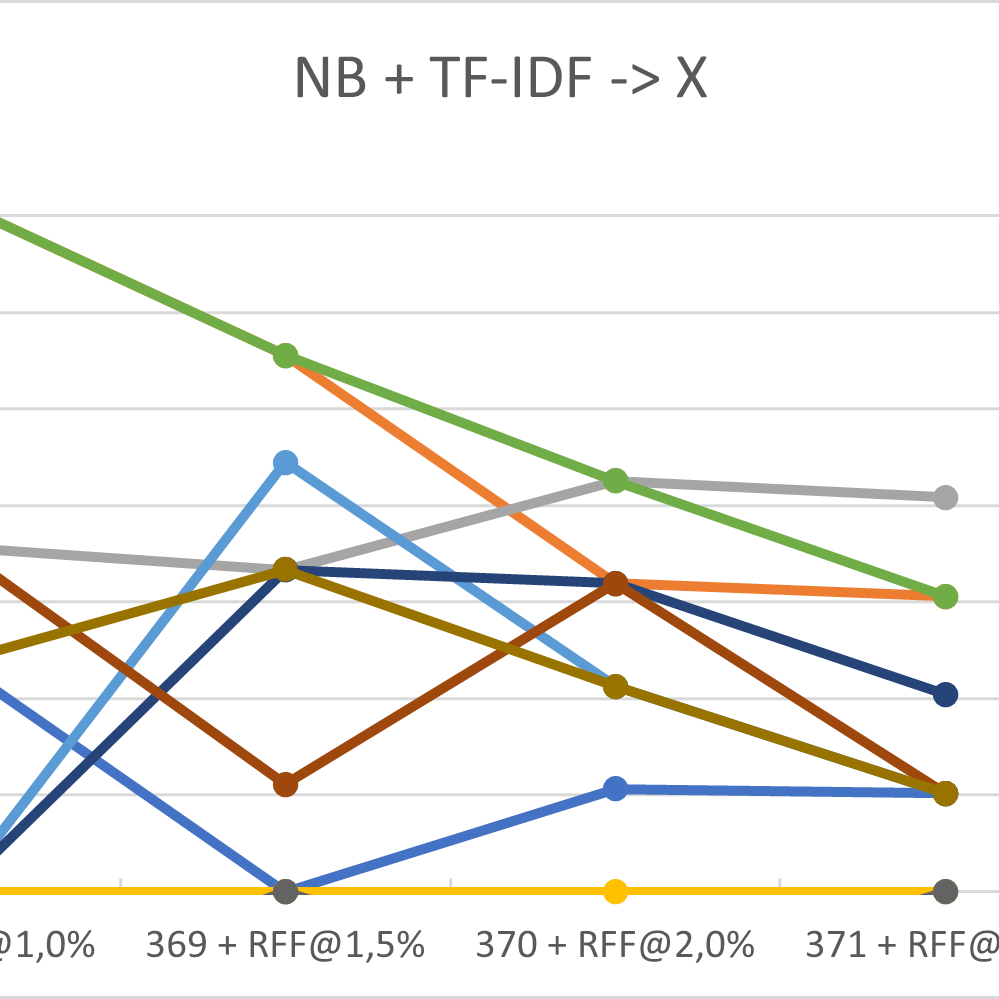

Ferdinands et al. (2020) evaluated active learning models across four classification techniques (naive Bayes, logistic regression, support vector machines, and random forest) and two feature extraction strategies (TF-IDF and doc2vec). To assess how well the models generalize across research contexts, their performance was measured in simulations on six systematic review datasets from various research areas. The models reduced the number of publications that needed to be screened by 63.9% to 91.7%, and the naive Bayes + TF-IDF model performed best overall.
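To make the simulation setup more concrete, here is a minimal pure-Python sketch of one such run using the best-performing combination, naive Bayes + TF-IDF. It makes several assumptions that are not taken from the study. It uses a certainty-based query strategy, which always screens the unlabeled record the model currently rates most likely to be relevant. It starts from one relevant and one irrelevant prior record, retrains after every label, and runs on a made-up toy corpus. All function names (`tfidf`, `train_nb`, `simulate`, `wss`) are hypothetical and are not the API of any screening tool. The work-saved figure is computed as work saved over sampling (WSS) at a chosen recall level. WSS is one common way to express "publications not needed to screen", but this sketch does not restate the study's exact metric definition.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document: raw term count times a smoothed idf.
    Assumption: whitespace tokenization and no vector normalization."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
    return [{t: c * idf[t] for t, c in Counter(tokens).items()} for tokens in tokenized]


def train_nb(features, labels, vocab, alpha=1.0):
    """Multinomial naive Bayes fitted on fractional TF-IDF counts with additive smoothing."""
    model = {}
    for cls in (0, 1):  # 0 = irrelevant, 1 = relevant
        rows = [f for f, y in zip(features, labels) if y == cls]
        totals = Counter()
        for f in rows:
            totals.update(f)  # sums TF-IDF weights per term within the class
        denom = sum(totals.values()) + alpha * len(vocab)
        log_prior = math.log(len(rows) / len(labels))
        log_lik = {t: math.log((totals[t] + alpha) / denom) for t in vocab}
        model[cls] = (log_prior, log_lik)
    return model


def relevance_score(model, f):
    """Log-odds of 'relevant' versus 'irrelevant' for one record's TF-IDF vector."""
    def log_joint(cls):
        log_prior, log_lik = model[cls]
        return log_prior + sum(w * log_lik[t] for t, w in f.items())
    return log_joint(1) - log_joint(0)


def simulate(docs, labels, prior_idx):
    """Retrain after every label and screen the highest-scoring unlabeled record next.

    prior_idx must contain at least one relevant and one irrelevant record.
    Returns the screening order (prior records first).
    """
    features = tfidf(docs)
    vocab = {t for f in features for t in f}
    order = list(prior_idx)
    pool = [i for i in range(len(docs)) if i not in order]
    while pool:
        model = train_nb([features[i] for i in order], [labels[i] for i in order], vocab)
        best = max(pool, key=lambda i: relevance_score(model, features[i]))
        pool.remove(best)
        order.append(best)  # the simulated reviewer now "reveals" labels[best]
    return order


def wss(order, labels, recall=0.95):
    """Work saved over sampling: share of records left unscreened once the
    target recall is reached, minus (1 - recall)."""
    n_total, n_relevant = len(labels), sum(labels)
    needed = math.ceil(recall * n_relevant)
    found = 0
    for screened, i in enumerate(order, start=1):
        found += labels[i]
        if found >= needed:
            return (n_total - screened) / n_total - (1 - recall)


# Hypothetical toy data: 1 = relevant to the review, 0 = irrelevant.
docs = [
    "active learning for systematic review screening",
    "deep learning image segmentation",
    "screening prioritization with active learning",
    "protein folding simulation",
    "systematic review automation and screening",
    "image classification benchmark",
    "reinforcement learning for robotics",
    "citation screening in systematic reviews",
]
labels = [1, 0, 1, 0, 1, 0, 0, 1]

order = simulate(docs, labels, prior_idx=[0, 1])
print("screening order:", order)
print("WSS@95%:", round(wss(order, labels), 3))
```

The sketch retrains from scratch after every label to keep it simple. Comparing other model combinations, such as logistic regression or doc2vec features, would mean swapping out `train_nb`/`relevance_score` or `tfidf` while keeping the same loop and metric.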

Ferdinands, G., Schram, R., De Bruin, J., Bagheri, A., Oberski, D. L., Tummers, L., & Van de Schoot, R. (2020, September 16). Active learning for screening prioritization in systematic reviews – A simulation study. https://doi.org/10.31219/osf.io/w6qbg