Reproducibility and Data storage Checklist for Active Learning-Aided Systematic Reviews

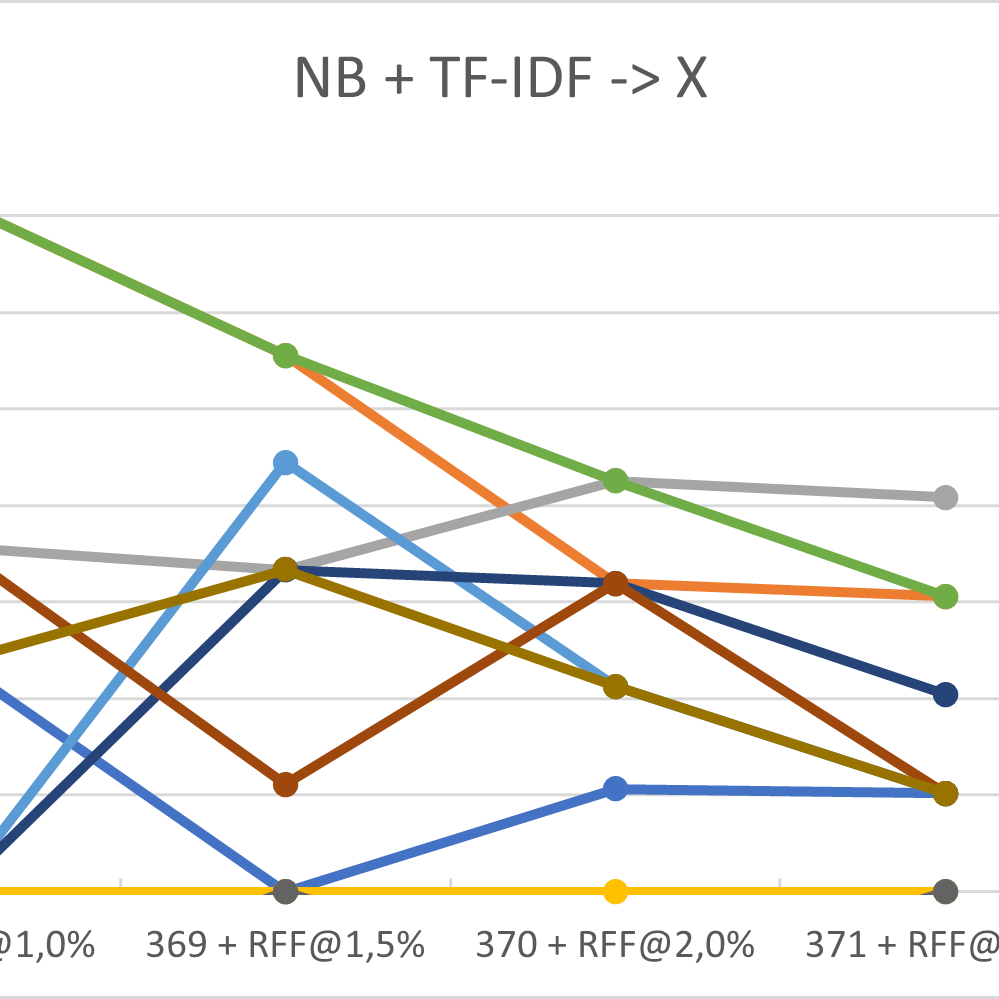

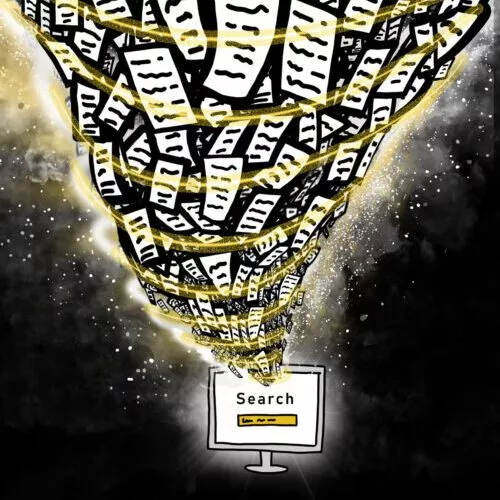

In the screening phase of a systematic review, screening prioritization via active learning effectively reduces the workload. However, the PRISMA guidelines are not sufficient for reporting the screening phase in a reproducible manner. Text screening with active learning is an iterative process, but the labeling decisions and the training of the active learning model can happen independently of each other in time. It is therefore not trivial to store the data from both events in a way that still shows which iteration of the model was used for each labeling decision. Moreover, many iterations of the active learning model are trained throughout the screening process, producing an enormous amount of data (think of many gigabytes or even terabytes), and machine learning models keep growing larger. Together, this can add up to an undesirable amount of data when all the data produced at every iteration of the active learning pipeline is stored naively. This article clarifies the steps in an active learning-aided screening process and what data is produced at each step. We show how this data can be stored efficiently in terms of size. Most notably, the data produced by the model is where we need to strike a balance between reproducibility and storage size. Finally, we created the RDAL-Checklist (Reproducibility and Data storage for Active Learning-aided systematic reviews – checklist), which helps users and creators of active learning software make their screening process reproducible.
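The core bookkeeping problem, linking each labeling decision to the model iteration that was active when it was made, can be illustrated with a small event log. The sketch below is a hypothetical schema built on Python's standard `sqlite3` module; it is not ASReview's actual storage format, and the class, table, and column names (`ScreeningLog`, `model_iterations`, `rankings`, `labels`) are assumptions for illustration only. It stores each model's ranking of record IDs rather than the model itself, which is one possible point on the reproducibility versus storage-size trade-off described above.

```python
import sqlite3

# Hypothetical schema (illustration only, not ASReview's real format):
# one row per trained model iteration, its ranking of record IDs,
# and one row per labeling decision tagged with the active iteration.
SCHEMA = """
CREATE TABLE model_iterations (
    iteration  INTEGER PRIMARY KEY,
    classifier TEXT NOT NULL,
    trained_at REAL NOT NULL
);
CREATE TABLE rankings (
    iteration INTEGER NOT NULL REFERENCES model_iterations(iteration),
    position  INTEGER NOT NULL,
    record_id INTEGER NOT NULL,
    PRIMARY KEY (iteration, position)
);
CREATE TABLE labels (
    record_id  INTEGER NOT NULL,
    label      INTEGER NOT NULL,
    labeled_at REAL NOT NULL,
    iteration  INTEGER REFERENCES model_iterations(iteration)
);
"""


class ScreeningLog:
    """Hypothetical log that ties labeling decisions to model iterations."""

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.executescript(SCHEMA)
        # None until the first model has finished training
        # (e.g. labels given as prior knowledge).
        self.current_iteration = None

    def log_model(self, iteration, classifier, trained_at, ranking):
        """Store a finished model iteration and its ranking of record IDs."""
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO model_iterations VALUES (?, ?, ?)",
                (iteration, classifier, trained_at),
            )
            self.conn.executemany(
                "INSERT INTO rankings VALUES (?, ?, ?)",
                [(iteration, pos, rid) for pos, rid in enumerate(ranking)],
            )
        # Labels logged from now on are attributed to this iteration.
        self.current_iteration = iteration

    def log_label(self, record_id, label, labeled_at):
        """Store a labeling decision together with the active iteration."""
        with self.conn:
            self.conn.execute(
                "INSERT INTO labels VALUES (?, ?, ?, ?)",
                (record_id, label, labeled_at, self.current_iteration),
            )

    def model_for_label(self, record_id):
        """Iteration active at the first decision on record_id (None if none)."""
        row = self.conn.execute(
            "SELECT iteration FROM labels WHERE record_id = ? ORDER BY labeled_at",
            (record_id,),
        ).fetchone()
        return None if row is None else row[0]
```

Because training runs independently of labeling, a decision made while a new model is still training is attributed to the previous iteration. For example, after `log_model(0, "nb", 1.0, [5, 2, 7])`, a label logged at time 3.0 stays linked to iteration 0 even if iteration 1 finishes at time 4.0. Storing rankings instead of model weights keeps the log small, but reproducing a model exactly would additionally require the training set, features, and settings of that iteration.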

Lombaers, P., de Bruin, J., & van de Schoot, R. (2023, January 19). Reproducibility and Data storage Checklist for Active Learning-Aided Systematic Reviews. DOI: 10.31234/osf.io/g93zf

Data generated using ASReview LAB is stored in an ASReview project file (extension `.asreview`). The ASReview Python API offers two ways to access the data in this file: the project API and the state API. The project API retrieves general project settings, the imported dataset, the feature matrix, and so on. The state API retrieves data related directly to the reviewing process, such as the labels, the time of labeling, and the classifier used. See the ASReview documentation for detailed instructions.
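The project and state APIs are the supported way to read a project file. For a quick look at what a project file contains, without installing ASReview, the sketch below uses only Python's standard `zipfile` module. It rests on an assumption: that the `.asreview` file is a ZIP archive, as project files of ASReview LAB 1.x are to our knowledge. The internal folder layout differs between versions, so the hypothetical helper `list_project_contents` does not rely on any particular member names; it only groups members by top-level folder.

```python
import zipfile
from pathlib import PurePosixPath


def list_project_contents(path):
    """Group the members of a project archive by top-level folder.

    Assumes the .asreview file is a ZIP archive. Files at the archive
    root are grouped under ".". Directory entries are skipped.
    """
    groups = {}
    with zipfile.ZipFile(path) as archive:
        for name in archive.namelist():
            if name.endswith("/"):  # explicit directory entry, no data
                continue
            parts = PurePosixPath(name).parts
            top = parts[0] if len(parts) > 1 else "."
            groups.setdefault(top, []).append(name)
    return groups
```

Usage: `list_project_contents("my_project.asreview")`, where the file name is a placeholder. To actually read labels, labeling times, or the classifier used, switch to the state API described in the ASReview documentation rather than parsing archive members by hand.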