The importance of abstracts

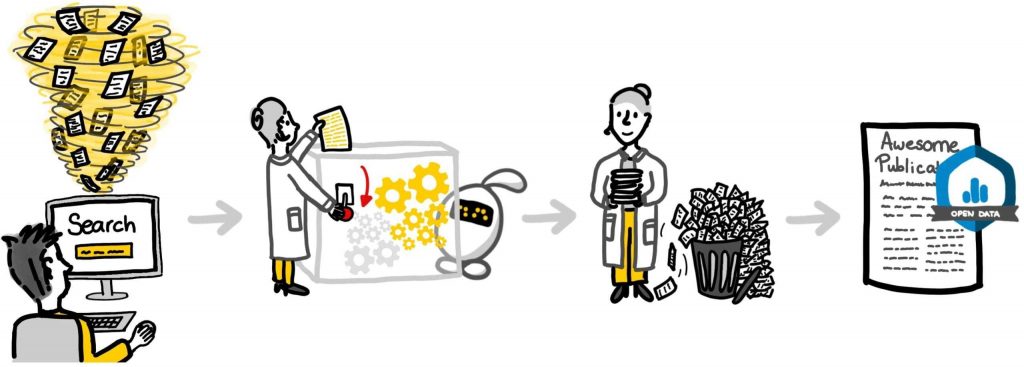

ASReview LAB offers effective, reliable and transparent title-abstract screening. It combines machine (deep) learning models with active learning and can reduce the number of titles you have to screen by up to 90%. We know that the performance of ASReview LAB improves considerably as the share of publications that include an abstract (rather than only a title) increases.

So how do you retrieve as many abstracts as possible? Jan de Boer, Bianca Kramer and Felix Weijdema, subject librarians at Utrecht University Library, offer some tips and tricks.

The merit of abstracts

Most databases do not provide the full text, only titles, abstracts, keywords and citation information. In large databases, you can expect over 95% of publications to have an abstract.

Example #1

“We prepared a dataset for Iris Engelhard on stress and anxiety in the ICU among both patients and health professionals. It contained over 12k publications from PubMed and PsycINFO, 97.5% of which had an abstract of at least 200 characters. Our broad query also returned papers on physical rather than psychological distress. Excluding such papers in the query itself could have removed relevant papers on psychological distress in patients suffering from physical distress following a disaster.” The ASReview tool nevertheless performed well, as Iris experienced:

“The initial search for IC studies yielded about 12,000 abstracts. However, the first 5 referred to physical rather than psychological distress, which may partly account for this large number. The 20 or so abstracts we looked at afterwards no longer referred to physical distress, and did yield psychological distress papers, so the software did a great job then!”

Tips for retrieving abstracts

But retrieving these abstracts from databases is not always easy. To start screening in ASReview LAB, you need to export your search results from one or more databases. Including abstracts in the export is not always the default option, and for large result sets it may not be possible at all.

After exporting the results from multiple databases, you will need to deduplicate them before screening. You will probably use a reference manager such as EndNote, RefWorks or Zotero to do so. Without going into the details of deduplication: when you remove a duplicate, make sure you keep the copy that has an abstract.
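If you deduplicate with a script instead of a reference manager, the same rule applies: among duplicates, keep the record with an abstract. The sketch below assumes each record is a Python dict with hypothetical keys `title`, `doi` and `abstract`. It matches duplicates on a lowercased DOI, falls back to a punctuation-insensitive title, and keeps the copy with the longest abstract. This is a simplified illustration, not how any particular reference manager deduplicates (for instance, a record with a DOI and its duplicate without one are not matched here).

```python
import re
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]


def _normalize_title(title: str) -> str:
    # Lowercase and drop everything except letters and digits, so that small
    # differences in punctuation or spacing do not hide duplicates.
    return re.sub(r"[^a-z0-9]", "", title.lower())


def _dedup_key(record: Record) -> str:
    # Prefer the DOI as identifier; fall back to the normalized title.
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return "doi:" + doi
    return "title:" + _normalize_title(record.get("title") or "")


def _abstract_length(record: Record) -> int:
    return len((record.get("abstract") or "").strip())


def deduplicate(records: List[Record]) -> List[Record]:
    """Keep one record per key, preferring the copy with the longest abstract."""
    best: Dict[str, Record] = {}
    for record in records:
        key = _dedup_key(record)
        current = best.get(key)
        # Replace the stored copy only if this one has a longer abstract.
        if current is None or _abstract_length(record) > _abstract_length(current):
            best[key] = record
    return list(best.values())


records = [
    {"title": "PTSD after disasters", "doi": "10.1000/xyz", "abstract": ""},
    {"title": "PTSD After Disasters.", "doi": "10.1000/XYZ", "abstract": "We studied..."},
]
print(deduplicate(records))  # one record left, the one with the abstract
```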

Even after retrieving as many abstracts as possible, some will still be missing. You can find the publications without an abstract by sorting your references on the abstract field in your reference manager. Some reference managers can also look for updates and add missing abstracts; this option is available in both EndNote and Mendeley.
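Outside a reference manager, you can also count missing abstracts directly in a RIS export. The sketch below is a minimal RIS reader, assuming the common `TAG  - value` line layout, `ER` as the end-of-record tag, and `AB` or `N2` as the abstract tag (exports differ, so check yours). The `min_length` parameter mirrors the "at least 200 characters" threshold used in the first example; the function names are hypothetical.

```python
import re
from typing import Dict, List, Optional

# A RIS line looks like "TI  - Some title": a two-character tag,
# two spaces, a hyphen and (usually) a space before the value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  -\s?(.*)$")

# Tags that commonly hold the abstract; this varies per export (assumption).
ABSTRACT_TAGS = ("AB", "N2")


def parse_ris(text: str) -> List[Dict[str, List[str]]]:
    """Split RIS text into records mapping each tag to its list of values."""
    records: List[Dict[str, List[str]]] = []
    current: Dict[str, List[str]] = {}
    last_tag: Optional[str] = None
    for line in text.splitlines():
        match = RIS_LINE.match(line)
        if not match:
            # Untagged, non-blank lines continue the previous value (long abstracts).
            if last_tag and line.strip():
                current[last_tag][-1] += " " + line.strip()
            continue
        tag, value = match.group(1), match.group(2).strip()
        if tag == "ER":  # end of record
            records.append(current)
            current, last_tag = {}, None
        else:
            current.setdefault(tag, []).append(value)
            last_tag = tag
    return records


def abstract_of(record: Dict[str, List[str]]) -> str:
    return " ".join(v for tag in ABSTRACT_TAGS for v in record.get(tag, [])).strip()


def missing_abstracts(records, min_length: int = 1):
    """Return the records whose abstract is shorter than min_length characters."""
    return [r for r in records if len(abstract_of(r)) < min_length]


sample = "TY  - JOUR\nTI  - Trauma in the ICU\nAB  - Patients and staff\nin intensive care.\nER  - \n\nTY  - JOUR\nTI  - No abstract here\nER  - \n"
records = parse_ris(sample)
missing = missing_abstracts(records)
print(f"{len(missing)} of {len(records)} ({len(missing) / len(records):.1%}) lack an abstract")
```

Running the same share calculation on the PTSD figures below, `f"{747 / 5782:.1%}"` gives `12.9%`.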

Example #2

In the PTSD test dataset, 12.9% of the publications have no abstract (747 out of 5,782 publications), a relatively large share.

We imported the RIS file into EndNote, identified the publications without abstracts and updated their metadata using “Find Reference Updates”, updating empty fields only. This retrieved abstracts for 374 publications, leaving only 373 publications (6.5% of the dataset) without an abstract.

If you are not using a reference manager, another option is to retrieve abstracts by identifier (for instance, the DOI). For the PTSD test dataset, we were able to retrieve 324 abstracts from The Lens, a free-to-use, open source database of scholarly works and patents.
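Once you have abstracts from a second source keyed by DOI (for example, an export from The Lens; its exact export columns are not covered here), merging them back in is a lookup on a normalized DOI. The sketch below is a hypothetical helper that, like the "empty fields only" update above, fills an abstract only when the record has none. It assumes records are dicts with `doi` and `abstract` keys and that `lookup` maps DOIs to abstracts; neither structure comes from ASReview or The Lens.

```python
from typing import Dict, List, Optional

DOI_PREFIXES = ("https://doi.org/", "http://doi.org/",
                "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def normalize_doi(doi: Optional[str]) -> str:
    """Lowercase a DOI and strip common prefixes such as https://doi.org/."""
    doi = (doi or "").strip().lower()
    for prefix in DOI_PREFIXES:
        if doi.startswith(prefix):
            return doi[len(prefix):].strip()
    return doi


def fill_missing_abstracts(records: List[Dict[str, Optional[str]]],
                           lookup: Dict[str, str]) -> int:
    """Fill empty abstracts in place from lookup (DOI -> abstract); return the count."""
    by_doi = {normalize_doi(k): v for k, v in lookup.items() if normalize_doi(k)}
    filled = 0
    for record in records:
        if (record.get("abstract") or "").strip():
            continue  # never overwrite an existing abstract ("empty fields only")
        abstract = by_doi.get(normalize_doi(record.get("doi")))
        if abstract and abstract.strip():
            record["abstract"] = abstract.strip()
            filled += 1
    return filled


records = [{"doi": "10.1000/ABC", "abstract": ""}]
print(fill_missing_abstracts(records, {"https://doi.org/10.1000/abc": "Found abstract."}))
```

Matching on normalized DOIs avoids missing a hit just because one source writes `https://doi.org/...` and the other a bare, differently capitalized DOI.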

You can use the ASReview software regardless of how you collect publications: combined queries in multiple databases, citation searching, snowball searching or consulting experts. In all cases, ASReview LAB works best if the final result contains as many abstracts and as few duplicates as possible.

How to cite ASReview?

Cite our project through this publication in Nature Machine Intelligence. For citing the software, please refer to the specific release of the ASReview software on Zenodo.

Contributing to ASReview

Do you have any ideas or suggestions that could improve ASReview? Create an issue, request a feature or report a bug on GitHub! Also take a look at the development fund to help ASReview continue on its journey to easier systematic reviewing.
