Seven ways to integrate ASReview in your systematic review workflow

Systematic reviewing with software that implements Active Learning (AL) is relatively new. Many users (and reviewers) still have to become familiar with the many ways AL can be used in practice. In this blog post, we discuss seven ways meant to inspire users.

- Use ASReview with a single screener.

- Use ASReview with multiple screeners.

- Switch models for hard-to-find papers.

- Add more data because a reviewer asks you to.

- Screen data from a narrow search and apply active learning to a comprehensive search.

- Quality check for incorrectly excluded papers due to screening fatigue.

- Use random reading because you like the looks of the software.

1. Use ASReview with a single screener

Let’s assume you conducted a systematic search in multiple databases, the records you have found in the different databases were merged into one dataset, the data was de-duplicated, and as many abstracts as possible were retrieved (why is this so important?). You found 10,000 potentially relevant records, and you want to screen the records based on predefined inclusion/exclusion criteria. You also have chosen a stopping rule.
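The post does not prescribe a particular stopping rule. As one illustration, a minimal sketch of a commonly used heuristic is shown here: stop once a fixed number of consecutive records have been labeled irrelevant. The function name `should_stop` and the threshold of 50 are assumptions made for this example, not ASReview settings.

```python
def should_stop(labels, max_consecutive_irrelevant=50):
    """Return True when the most recent labels are all irrelevant (0).

    labels: list of 0/1 decisions in the order the records were screened.
    max_consecutive_irrelevant: hypothetical threshold, chosen before screening.
    """
    if max_consecutive_irrelevant < 1:
        raise ValueError("threshold must be at least 1")
    if len(labels) < max_consecutive_irrelevant:
        return False  # not enough records screened yet
    return not any(labels[-max_consecutive_irrelevant:])


# Example with a small threshold so the behavior is easy to follow.
screening_history = [1, 0, 1, 0, 0, 0]
stop_now = should_stop(screening_history, max_consecutive_irrelevant=3)
```

Whatever rule you pick, the point is to fix it before screening starts so that the decision to stop does not depend on how tired you are.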

You already know of ten relevant records (for example, from asking experts in the field). To warm up the model, you can use five of them as prior knowledge and keep the other five as validation records to check whether the software can find them. After selecting ten randomly chosen irrelevant records and deciding on the active learning model, you can start screening until you reach your stopping criterion. The goal is to screen fewer records than exist in your dataset, and simulation studies have shown you can skip up to 95% of the records (e.g., Van de Schoot et al., 2022), but this highly depends on your dataset and inclusion/exclusion criteria (e.g., Harmsen et al., 2022). After deciding to stop screening, you can export the results (i.e., the partly labeled data and the project file containing the technical information to reproduce the entire process) and publish them on, for example, the Open Science Framework. As a final step, you can mark the project as finished in ASReview.
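The split of known relevant records into prior knowledge and validation records can be prepared outside the software. The sketch below is one possible way to do it, assuming each record has some unique identifier. The IDs, the function names, and the fixed random seed are hypothetical and only serve the example.

```python
import random


def split_known_relevant(record_ids, n_prior, seed=42):
    """Randomly split known relevant records into priors and validation records."""
    if not 0 < n_prior < len(record_ids):
        raise ValueError("n_prior must leave at least one record on each side")
    shuffled = list(record_ids)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    return sorted(shuffled[:n_prior]), sorted(shuffled[n_prior:])


def check_validation(validation_ids, labeled_relevant_ids):
    """Return the validation records found during screening and those still missing."""
    found = set(validation_ids) & set(labeled_relevant_ids)
    missing = set(validation_ids) - found
    return sorted(found), sorted(missing)


# Ten known relevant records (hypothetical identifiers).
known_relevant = [f"rec_{i}" for i in range(1, 11)]
priors, validation = split_known_relevant(known_relevant, n_prior=5)

# After screening: compare the validation records with what was labeled relevant.
found, missing = check_validation(validation, labeled_relevant_ids=validation[:4])
```

If validation records are still missing when the stopping criterion is reached, that is a signal to keep screening or to reconsider the model.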

2. Use ASReview with multiple screeners

Again, let’s assume you found 10,000 potentially relevant records, and you want to screen the records based on predefined inclusion/exclusion criteria using multiple screeners, let’s say two researchers. Then there are numerous options:

Option 1

Both researchers install the software locally, upload the same data, and select identical records as prior knowledge. Both researchers train the same active learning model and independently start screening records for relevance. After both researchers are done screening, because each has fulfilled the pre-specified stopping criterion, the results, containing the labeling decisions and the ranking of the unseen records, are exported as a RIS, CSV, or XLSX file. Both files can be merged in, for example, Excel or R. Now, both screeners can discuss differences in labeling decisions and compute the inter-rater agreement (e.g., Kappa), just like with classical PRISMA-based reviewing. The only difference is that there might be records only seen by one of the two researchers or records not seen at all.
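As an illustration of the comparison step, the sketch below computes Cohen's kappa on the records that both screeners labeled and lists the records seen by only one of them. It assumes the two exports have already been read into dictionaries that map a record ID to a `0`/`1` label. The record IDs and variable names are hypothetical.

```python
def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for binary labels, using only records labeled by both screeners."""
    shared = sorted(set(labels_a) & set(labels_b))
    if not shared:
        raise ValueError("no records were labeled by both screeners")
    n = len(shared)
    observed = sum(labels_a[r] == labels_b[r] for r in shared) / n
    p_a = sum(labels_a[r] for r in shared) / n  # share labeled relevant by A
    p_b = sum(labels_b[r] for r in shared) / n  # share labeled relevant by B
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)  # agreement expected by chance
    if expected == 1:
        return 1.0  # both screeners gave every shared record the same single label
    return (observed - expected) / (1 - expected)


# Hypothetical labeling decisions; unseen records are simply absent.
screener_a = {"r1": 1, "r2": 0, "r3": 1, "r4": 0, "r5": 0}
screener_b = {"r1": 1, "r2": 0, "r3": 0, "r4": 0, "r6": 1}

kappa = cohens_kappa(screener_a, screener_b)
disagreements = sorted(
    r for r in set(screener_a) & set(screener_b) if screener_a[r] != screener_b[r]
)
only_seen_by_a = sorted(set(screener_a) - set(screener_b))
only_seen_by_b = sorted(set(screener_b) - set(screener_a))
```

Records in `only_seen_by_a` and `only_seen_by_b` cannot contribute to the agreement statistic, so it is worth reporting how many there are alongside the kappa value.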

Tip: One screener can set up the project and export the project file. The second screener can import the project file instead of setting up a new project. This will make sure that both screeners start with the same priors and project setup.

Option 2

Both screeners use the same data, but they use a different set of records as prior knowledge. After screening until the stopping criterion has been reached, both screeners merge the results to check whether the same relevant papers have been found independently of the initial training set.

A similar procedure can be applied with different settings for the active learning model (e.g., two different feature extractors or classifiers) to check whether they find the same set of relevant records independently of the chosen model.
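Checking whether two runs found the same relevant papers comes down to comparing two sets. A minimal sketch, assuming the relevant record IDs of each run have been extracted from the exports (the IDs below are hypothetical):

```python
def compare_relevant_sets(relevant_a, relevant_b):
    """Group relevant records by whether both runs or only one run found them."""
    a, b = set(relevant_a), set(relevant_b)
    return {
        "both": sorted(a & b),
        "only_a": sorted(a - b),
        "only_b": sorted(b - a),
    }


# Relevant records found with two different prior sets (or two different models).
run_a = ["r1", "r2", "r3"]
run_b = ["r2", "r3", "r4"]
comparison = compare_relevant_sets(run_a, run_b)
```

Records that appear in only one run are the interesting ones: they show how much the outcome depended on the starting point.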

Option 3

Researcher A starts screening, and Researcher B takes over when A's screening time is up. When B is done, A takes over again. You can export the project file and share it with a colleague, who can import the file in ASReview to continue screening where the first researcher stopped (officially supported). Alternatively, ASReview can be put on a server (not officially supported but successfully implemented by some users).

Tip: Collaborate with researchers in different time zones, so screening can continue 24 hours per day!

3. Switch models for hard-to-find papers

Based on a simulation study by Teijema et al. (2022), we strongly advise switching to a different model after reaching your predefined stopping criterion. Especially switching from a simple and fast model (e.g., TF-IDF with NB or logistic regression) to a more advanced and computationally intensive model (e.g., doc2vec or sBert with a neural network, or even a custom model designed specifically for your data and added via a template) can help find records that are harder for the simpler models to identify. Be aware of the longer training time of advanced feature extraction techniques during the warm-up phase; see Table 1 of Teijema et al. for an indication of the time you need to wait before the first iteration is done training.

Procedure:

- Start screening round 1.

- Use the information on the analytics page to determine whether you reached your stopping criterion.

- Export your data and project file.

- Start a new project for screening round 2.

- Upload your partly labeled dataset; the labels of your first round of screening will be automatically recognized as prior knowledge.

- Select a different model from the one you used in screening round 1.

- Screen for another round until you reach your stopping criterion again.

- Export your data and the project file.

- Mark your project as finished.

- Make both the project file and dataset for both screening rounds available, for example, on the Open Science Framework.

The Mega Meta project is an example where we applied the procedure mentioned above. The team first screened a dataset containing >165K records using TF-IDF and logistic regression. After reaching the stopping criterion, the labeling decisions of the first round were used to optimize the hyperparameters of a neural network. Using the optimized hyperparameters, a 17-layer CNN model was applied to the partly labeled data of the first round. The output was screened for another round until the stopping criterion was reached. 290 extra papers were screened, and 24 additional relevant papers were found. The workflow is described in Brouwer et al. (2022).

4. Add more data because a reviewer asks you to

Working on a systematic review project (or meta-analysis) is a long process, and by the time reviewers are reading your paper, your literature search is outdated. Often it is Reviewer #2 who asks you to update your search 🙁

A way to deal with this request is to re-run your search and add the newly found and unlabeled records to your labeled dataset. Make sure to have a column in the dataset containing the labels (`0` for excluded papers and `1` for the included papers, and leave blank for the new records). Import the file into ASReview and the labeled records will be detected as prior knowledge.

Tip: When using reference management software, the labels can be stored in the N1 field.
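The merge of old, labeled records with newly found, unlabeled ones can be sketched as follows. This is only an illustration under a few assumptions: the column name `included` is a guess at a label column name that ASReview recognizes (check the ASReview documentation for the accepted names), records are matched on a `doi` column, and the example records are made up.

```python
import csv
import io


def merge_for_update(labeled_rows, new_rows, key="doi", label_col="included"):
    """Append newly found records to a labeled dataset, leaving their label blank.

    Records whose key already occurs in the labeled data are skipped, so the
    existing labels are never overwritten.
    """
    seen = {row[key] for row in labeled_rows}
    merged = [dict(row) for row in labeled_rows]
    for row in new_rows:
        if row[key] in seen:
            continue  # already screened in the first round
        seen.add(row[key])
        merged.append({**row, label_col: ""})  # blank label marks an unseen record
    return merged


def to_csv(rows, fieldnames):
    """Write the merged rows to CSV text that can be saved and imported."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical records: two labeled in the first search, two from the updated search.
first_search = [
    {"title": "Paper A", "doi": "10.1000/a", "included": "1"},
    {"title": "Paper B", "doi": "10.1000/b", "included": "0"},
]
updated_search = [
    {"title": "Paper B", "doi": "10.1000/b"},  # duplicate of a labeled record
    {"title": "Paper C", "doi": "10.1000/c"},
]

merged = merge_for_update(first_search, updated_search)
csv_text = to_csv(merged, fieldnames=["title", "doi", "included"])
```

Matching on DOI alone is a simplification. Records without a DOI need another de-duplication strategy before the merge.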

For example, Berk et al. (2022) performed a classical search and screening process, identifying 638 records, of which 18 were deemed relevant. Then, the search was updated after 6 months. The labels of the first search were used as training data for the second search. Then, the 53 records were screened using ASReview, and seven additional relevant records were identified.

5. Screen data from a narrow search and apply active learning to a comprehensive search

Since a single literature search can easily result in thousands of publications that have to be read and screened for relevance, literature screening is time-consuming. As truly relevant papers are very sparse (often <5%), this is an extremely imbalanced data problem. When answering a research question is urgent, as with the COVID-19 crisis, it is even more challenging to provide a review that is both fast and comprehensive. Therefore, scholars often develop narrower searches; however, this increases the risk of missing relevant studies.

Option 1

To avoid having to make harsh decisions in the search phase, you could spend an equal amount of screening time starting with a much larger dataset. That is, you can broaden the search query and identify many more papers than you would have been willing to screen with a narrower search. Since the model always presents the record it predicts to be most likely relevant, you will spend that screening time on the most likely relevant papers in the larger dataset.

Option 2

You could first perform a classical search and use all the decisions made to train a model for a larger dataset found with a broader search.

For example, Mohseni et al. (2022) broadened the search terms after an initial search strategy. The original search identified 996 articles, of which 93 were deemed relevant after manual screening. The broader search yielded 3477 records, and after screening the first 996 abstracts with ASReview, they found 28 additional relevant abstracts, of which 3 met the final full-text inclusion criteria and would otherwise have been missed using the standard search strategy.

Similarly, Savas et al. (2022) screened papers from 2010 and onward, which yielded 1155 records, of which 30 were deemed relevant. Subsequently, a new search was performed, including papers published before 2010. This search yielded an additional 4,305 records. The labels of the screening phase of the first search were used as training data for the second search, and five papers were found to meet the inclusion criteria.

6. Quality check for excluded records

Due to screening fatigue, you might have accidentally excluded a relevant paper. To check for such incorrectly excluded but relevant papers, you can ask a second screener to screen your excluded papers using the relevant records as training data.

Procedure:

- Screener A finishes the screening process (with or without using active learning / ASReview).

- In reference manager software (or Excel), select the included and excluded records, but remove all unseen records from the data. Add a column with the label `1` for the relevant records. Select 10 records you are sure should be excluded and add the label `0`. Remove any labels for the other excluded records you want to check.

- Decide on a stopping rule.

- Start a project in ASReview, import the partly labeled dataset (the prior knowledge will be automatically detected), and train a model.

- Ask a second screener to screen the data (maybe just for 1-2 hours). This person will screen the initially excluded records but rank-ordered based on relevance scores.

- After the stopping criterion has been reached, mark the project as finished and export the data and project file.
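The labeling step of the procedure above can be sketched as a small helper that assigns `1` to the included records, `0` to the exclusions you are sure about, and a blank label to the excluded records the second screener should re-check. The record IDs and the function name are hypothetical, and only one "sure" exclusion is used to keep the example short.

```python
def build_quality_check_labels(included_ids, excluded_ids, sure_excluded_ids):
    """Return {record_id: label} for a partly labeled quality-check dataset.

    "1" = relevant, "0" = certain exclusion, "" = excluded record to re-check.
    Unseen records are assumed to have been removed beforehand.
    """
    sure = set(sure_excluded_ids)
    unknown = sure - set(excluded_ids)
    if unknown:
        raise ValueError(f"not in the excluded set: {sorted(unknown)}")
    labels = {record_id: "1" for record_id in included_ids}
    for record_id in excluded_ids:
        labels[record_id] = "0" if record_id in sure else ""
    return labels


# Screener A's decisions (hypothetical IDs).
included = ["a", "b"]
excluded = ["c", "d", "e"]
sure_excluded = ["c"]

labels = build_quality_check_labels(included, excluded, sure_excluded)
to_recheck = sorted(rid for rid, label in labels.items() if label == "")
```

The resulting labels can be written to the label column of the dataset before importing it, so that only the records in `to_recheck` are presented to the second screener.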

This procedure was used in Brouwer et al. (2022). In total, 388 records originally labeled as irrelevant but predicted by the machine learning model as most likely relevant were assessed by a topic expert, and 95 of these labels were changed back to relevant.

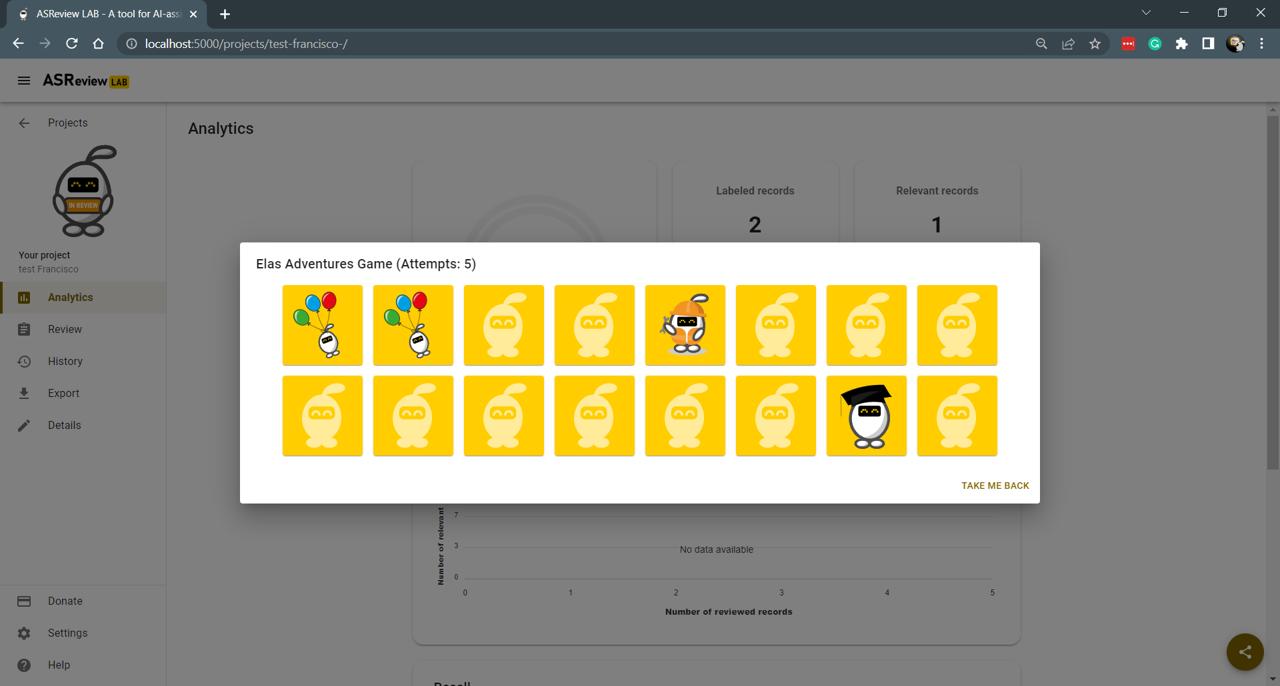

7. Use random reading because you like the looks of the software

Maybe you don’t want to use active learning, but you do want to use the software because it looks great! Or, because there is a hidden gaming mode… No worries, you can always select random as the query strategy.