Active Learning Explained

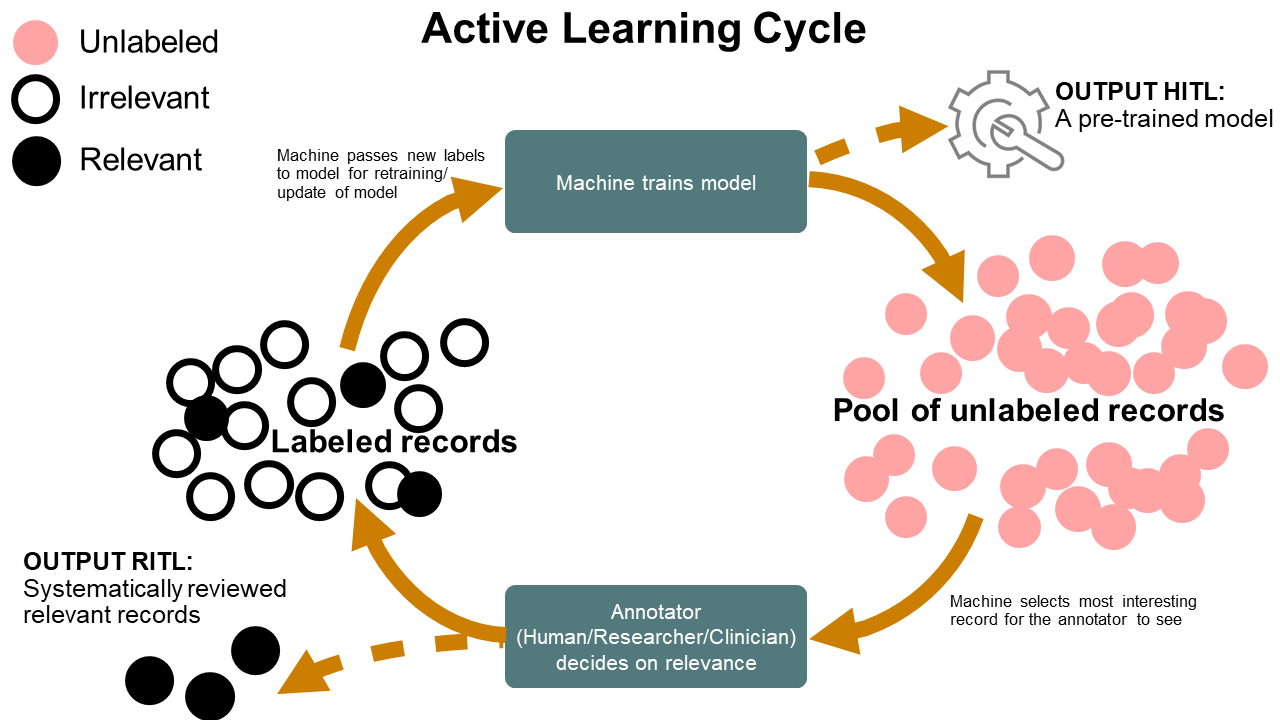

The rapidly evolving field of artificial intelligence (AI) has enabled the development of AI-aided pipelines that assist in finding relevant texts for search tasks such as systematic reviews[1]. A well-established approach to increasing the efficiency of screening large amounts of textual data is screening prioritization[2, 3] with active learning[4]. Screening prioritization re-arranges the records to be screened from random to a more intelligent order. Active learning denotes the scenario in which the reviewer labels specific records that are selected by a machine learning model. The machine learning model learns from the reviewer’s decisions and uses this knowledge to select the next record presented to the reviewer. The annotated dataset starts out small and iteratively grows in size. Active learning is very effective for systematic reviewing and can save up to 95% of screening time[5-15].

Classical Pipeline

In a classical systematic review, you start with the set of unlabeled records (e.g., meta-data containing titles and abstracts of scientific papers or newspaper articles, emails, policy documents, or any type of textual data) retrieved from a systematic search. After merging the results from different databases, abstract retrieval, and de-duplication, the data contains a pool of unlabeled records. A classical systematic screening process would involve screening all the records for relevance in random order. If the records contain meta-data (e.g., titles and abstracts), the full texts are read and screened for relevance once all the records have been screened.

Since a single literature search can easily result in thousands of records that have to be read and screened for relevance, systematic literature screening is time-consuming. An experienced reviewer can screen an average of two abstracts per minute; for less experienced reviewers, it can take up to 7 minutes per reference[12]. As truly relevant papers are very sparse (often <5%), this is an extremely imbalanced data problem. When answering a research question is urgent, as with the current COVID-19 crisis, it is even more challenging to provide a review that is both timely and comprehensive. Therefore, scholars often develop narrower searches, with the risk of missing relevant studies. However, even with narrow searches, the number of papers that require manual screening by highly skilled screeners still far exceeds the available time and resources. Also, this practice often yields unacceptable numbers of false negatives (wrongly excluded papers) due to screening fatigue. Moreover, humans tend to make mistakes, especially when their time is spent on repetitive tasks, and typically fail to identify about 10% of the relevant papers[19].

AI-aided Pipeline

AI-aided systematic reviewing works as follows: Just like with a classical pipeline, you start with the set of all unlabeled records retrieved from a search. This step is followed by constructing a training set consisting of labeled records provided by the annotator. At least one relevant record and one irrelevant record are needed to train the first iteration of the active learning model. More training data may result in better performance, but simulation studies already show excellent performance with a training set of only one relevant and one irrelevant record. Partly labeled data, where previously labeled data is combined with new unlabeled data, can also be used.

The next step is feature extraction. That is, an algorithm cannot make predictions from the records as they are; their textual content needs to be represented more abstractly. The algorithm can then determine the important features necessary to classify a record, thereby drastically decreasing the search space. Many different feature extractors are available, including multi-language ones.
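As an illustration of what "representing text more abstractly" can mean, the sketch below turns each record into a dictionary of TF-IDF weights using only the Python standard library. TF-IDF is just one possible feature extractor, chosen here as an assumption for the example; the function names `tokenize` and `tfidf_vectors` are hypothetical and not part of any particular tool.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(texts):
    """Return one {term: weight} dict per text using a basic TF-IDF scheme."""
    token_lists = [tokenize(text) for text in texts]
    n_docs = len(token_lists)
    # Document frequency: in how many records does each term occur?
    doc_freq = Counter(term for tokens in token_lists for term in set(tokens))
    vectors = []
    for tokens in token_lists:
        counts = Counter(tokens)
        total = len(tokens) or 1  # avoid division by zero for empty records
        vectors.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


records = [
    "Active learning for citation screening",
    "Deep learning for image recognition",
    "Citation screening with text mining",
]
vectors = tfidf_vectors(records)
# Terms that occur in fewer records receive a higher weight.
print(vectors[0])
```

In this toy example, "active" occurs in one record only and therefore gets a higher weight than "learning", which occurs in two. A term that occurs in every record would get weight zero under this unsmoothed variant.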

Then, the active learning cycle starts:

1. A classification algorithm (i.e., machine learning model) is trained on the labeled records, using a balancing strategy to deal with the imbalance of the data, and relevance scores are estimated for all unlabeled records.

2. ELAS, your Electronic Learning Assistant, chooses a record to show (query strategy).

3. The annotator screens this record and provides a label, relevant or irrelevant. The newly labeled record is moved to the training data set, and the cycle starts again.
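The three steps above can be sketched as a small loop. This is a minimal illustration under several assumptions, not the implementation of any specific tool: the classifier is a hand-written naive Bayes model on word counts, equal class priors stand in for a balancing strategy, the query strategy picks the highest-scoring record, and a dictionary of known labels (`truth`) plays the role of the annotator. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def train(labeled):
    """Count word occurrences per class from (text, label) pairs."""
    counts = {0: Counter(), 1: Counter()}
    for text, label in labeled:
        counts[label].update(tokenize(text))
    return counts


def relevance_score(counts, text):
    """Log-odds of relevance under naive Bayes with Laplace smoothing.

    Equal class priors are assumed, a crude stand-in for a balancing strategy.
    """
    vocab = set(counts[0]) | set(counts[1])
    totals = {c: sum(counts[c].values()) + len(vocab) for c in (0, 1)}
    score = 0.0
    for word in tokenize(text):
        if word in vocab:  # words never seen in training carry no evidence
            p_rel = (counts[1][word] + 1) / totals[1]
            p_irr = (counts[0][word] + 1) / totals[0]
            score += math.log(p_rel / p_irr)
    return score


def active_learning_cycle(pool, prior, oracle, n_queries):
    """Run the train / query / label loop; return (record, label) in query order."""
    labeled = list(prior)
    seen = {text for text, _ in prior}
    unlabeled = [record for record in pool if record not in seen]
    order = []
    for _ in range(min(n_queries, len(unlabeled))):
        model = train(labeled)                                   # step 1: train
        best = max(unlabeled, key=lambda r: relevance_score(model, r))  # step 2: query
        label = oracle(best)                                     # step 3: annotate
        labeled.append((best, label))
        unlabeled.remove(best)
        order.append((best, label))
    return order


pool = [
    "active learning for abstract screening",
    "screening prioritization in systematic reviews",
    "machine learning reduces screening workload",
    "soil moisture and crop yield",
    "crop rotation effects on soil",
    "rainfall and wheat yield",
]
truth = {text: int(i < 3) for i, text in enumerate(pool)}  # simulated annotator
prior = [(pool[0], 1), (pool[3], 0)]  # one relevant and one irrelevant record
order = active_learning_cycle(pool, prior, truth.get, n_queries=4)
labels = [label for _, label in order]
print(labels)
```

Starting from one relevant and one irrelevant record, the two remaining relevant records in this toy pool are presented before the irrelevant ones, which is the re-ordering effect that screening prioritization aims for.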

RITL and HITL

The interaction with the human can be used to train a model with a minimum number of labeling tasks. When the trained model is considered the main output and is then used to classify new data, the approach is called Human-in-the-Loop (HITL) machine learning[16]. In the general sense, the key idea behind active learning is that a model that is allowed to decide for itself which data it wants to learn from may reach better performance and accuracy with fewer training instances. Moreover, the dataset’s informativeness is increased by having the reviewer annotate the references that are most informative to the model; this method is called uncertainty-based sampling. For HITL applications, the active learning cycle is repeated until the model reaches its best performance.

The application of active learning to systematic reviewing is called Researcher-In-The-Loop (RITL)[17] with three unique components:

(I) The primary output of the process is a selection of the pool with only relevant papers;

(II) All data points in the relevant selection should have been seen by a human at the end of the process (certainty-based sampling);

(III) The process requires a strong, systematic way of working so that it ensures (more or less) that all likely relevant publications are found in a standardized way based on pre-defined eligibility criteria.

For RITL applications the active learning cycle is repeated until the annotator has seen all relevant records (keeping in mind humans fail to find about 10% of the relevant records). The goal is to save time by screening fewer records than exist in the entire pool in interaction with the machine, which becomes ‘smarter’ after each labeling decision.
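The difference between the uncertainty-based sampling used in HITL and the certainty-based sampling used in RITL comes down to which record is picked from the model's scores. The sketch below assumes the scores are relevance probabilities between 0 and 1, keyed by a record identifier; the function names and the example identifiers are hypothetical.

```python
def uncertainty_query(scores):
    """Return the record id whose relevance probability is closest to 0.5."""
    return min(scores, key=lambda record_id: abs(scores[record_id] - 0.5))


def certainty_query(scores):
    """Return the record id with the highest relevance probability."""
    return max(scores, key=scores.get)


# Hypothetical relevance probabilities for three unlabeled records.
scores = {"rec_a": 0.92, "rec_b": 0.48, "rec_c": 0.07}

print(uncertainty_query(scores))  # record the model is least sure about
print(certainty_query(scores))    # record most likely to be relevant
```

Uncertainty-based sampling selects `rec_b`, which would teach the model the most, whereas certainty-based sampling selects `rec_a`, which brings the likely relevant record in front of the reviewer first.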

How to Stop?

The model incrementally improves its predictions on the remaining unlabeled records in the active learning cycle. The cycle continues until all records have been labeled or until the user stops labeling. With RITL, the goal is to identify all relevant records as early in the process as possible. The reviewer either stops at some point during the process to conserve resources or continues until all records have been labeled. In the latter case, no time is saved, so the main question is when to stop, i.e., how to determine the point at which the cost of labeling more records exceeds the cost of the errors made by the current model[18]. Finding 100% of the relevant papers appears to be almost impossible, even for human annotators[19]. Therefore, we typically aim to find 95% of the inclusions. However, with an unlabeled dataset, you do not know how many relevant papers are left to be found. Researchers might therefore stop too early and miss many relevant papers, or stop too late and read more than necessary[20]. For example, one can decide to stop reviewing after a certain number of non-relevant papers have been found in succession[21], but this is up to the user to decide.
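In a simulation, where all labels are known in advance, the 95% target can be checked directly. The following sketch counts how many records must be screened, in the order the model presented them, before the target share of relevant records has been found. It does not apply to a live review, where the total number of relevant records is unknown; the function name is hypothetical.

```python
import math


def records_to_reach_recall(labels_in_screening_order, target=0.95):
    """Return how many records must be screened to find `target` of the relevant ones.

    Labels are 1 (relevant) or 0 (irrelevant), listed in screening order.
    """
    total_relevant = sum(labels_in_screening_order)
    if total_relevant == 0:
        raise ValueError("no relevant records in the labels")
    needed = math.ceil(target * total_relevant)
    found = 0
    for position, label in enumerate(labels_in_screening_order, start=1):
        found += label
        if found >= needed:
            return position


# Toy simulation: 10 records, 4 of them relevant, in the order they were screened.
labels = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
n_screened = records_to_reach_recall(labels)
print(n_screened, n_screened / len(labels))
```

With only four relevant records, 95% rounds up to all four, so seven of the ten records have to be screened in this toy example and the last three could be skipped.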

Potential stopping rules that can be implemented include estimating the number of potentially relevant papers or finding an inflection point, as discussed in [27-29, 31, 32, 34, 35, 37, 39].

Another option is to use heuristics [25,30,33], for example:

Time-based strategy: If you choose a time-based strategy, you decide to stop after a fixed amount of time X. This strategy can be useful when you have a limited amount of time to screen.

Data-driven strategy: When using a data-driven strategy, you decide to stop after, for example, Y consecutive irrelevant papers. Whether you choose 50, 100, 250, 500, etc. depends on the size of the dataset and the goal of the user. You can ask yourself: how important is it to find all the relevant papers?

Mixed strategy: Another option is to stop after X amount of time, unless you reach the predetermined threshold of Y consecutive irrelevant papers before that time.

Switching: A strategy we highly recommend is to switch to a different active learning model after reaching the stopping rule and to screen another round of records. Switching to a different model, especially a different feature extractor, re-orders the records based on a different vocabulary and might help to find slightly different records. After many iterations, neural networks might also be useful. Such models require much more training data but can help detect records that differ in vocabulary yet are similar in semantic meaning, which can happen due to concept ambiguity, the different angles from which a subject can be studied, and changes in the meaning of a concept over time.
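The time-based, data-driven, and mixed heuristics above can be expressed as a single check that is evaluated after every labeling decision. This is a minimal sketch; the function and parameter names (`should_stop`, `max_irrelevant`, `max_minutes`) are hypothetical, and the thresholds remain the user's choice.

```python
def consecutive_irrelevant(labels):
    """Count the irrelevant (0) labels at the end of the screening history."""
    count = 0
    for label in reversed(labels):
        if label == 1:
            break
        count += 1
    return count


def should_stop(labels, minutes_spent, max_irrelevant=None, max_minutes=None):
    """Return True once any configured limit has been reached.

    Set only max_minutes for a time-based strategy, only max_irrelevant for a
    data-driven strategy, or both for a mixed strategy.
    """
    hit_data_limit = (
        max_irrelevant is not None
        and consecutive_irrelevant(labels) >= max_irrelevant
    )
    hit_time_limit = max_minutes is not None and minutes_spent >= max_minutes
    return hit_data_limit or hit_time_limit


history = [1, 0, 1, 0, 0, 0]  # labels so far, most recent last

print(should_stop(history, minutes_spent=10, max_irrelevant=3))
print(should_stop(history, minutes_spent=10, max_irrelevant=5, max_minutes=60))
print(should_stop(history, minutes_spent=60, max_irrelevant=5, max_minutes=60))
```

With both limits set, screening stops at whichever limit is reached first, which corresponds to the mixed strategy.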

Even more options and considerations can be found on the discussion platform.

Non-exhaustive List of References

- Harrison, H., et al., Software tools to support title and abstract screening for systematic reviews in healthcare: an evaluation. BMC Medical Research Methodology, 2020. 20(1): p. 7.

- Cohen, A.M., K. Ambert, and M. McDonagh, Cross-Topic Learning for Work Prioritization in Systematic Review Creation and Update. J Am Med Inform Assoc, 2009. 16(5): p. 690-704.

- Shemilt, I., et al., Pinpointing Needles in Giant Haystacks: Use of Text Mining to Reduce Impractical Screening Workload in Extremely Large Scoping Reviews. Res. Synth. Methods, 2014. 5(1): p. 31-49.

- Settles, B., Active Learning. Synthesis Lectures on Artificial Intelligence and Machine Learning, 2012. 6(1): p. 1-114.

- Yu, Z. and T. Menzies, FAST2: An intelligent assistant for finding relevant papers. Expert Systems with Applications, 2019. 120: p. 57-71.

- Yu, Z., N.A. Kraft, and T. Menzies, Finding Better Active Learners for Faster Literature Reviews. Empir. Softw. Eng., 2018. 23(6): p. 3161-3186.

- Miwa, M., et al., Reducing Systematic Review Workload through Certainty-Based Screening. J Biomed Inform, 2014. 51: p. 242-253.

- Cormack, G.V. and M.R. Grossman, Engineering quality and reliability in technology-assisted review. Proceedings of the 39th International ACM SIGIR conference on Research and Development in Information Retrieval, 2016: p. 75-84.

- Cormack, G.V. and M.R. Grossman, Autonomy and Reliability of Continuous Active Learning for Technology-Assisted Review. 2015.

- Wallace, B.C., C.E. Brodley, and T.A. Trikalinos, Active learning for biomedical citation screening. Proceedings of the 16th ACM SIGKDD international conference on Knowledge discovery and data mining, 2010: p. 173-182.

- Wallace, B.C., et al., Deploying an interactive machine learning system in an evidence-based practice center: abstrackr. Proceedings of the ACM International Health Informatics Symposium (IHI), 2012: p. 819-824.

- Wallace, B.C., et al., Semi-Automated Screening of Biomedical Citations for Systematic Reviews. BMC Bioinform, 2010. 11(1): p. 55-55.

- Gates, A., et al., Performance and Usability of Machine Learning for Screening in Systematic Reviews: A Comparative Evaluation of Three Tools. Systematic Reviews, 2019. 8(1): p. 278-278.

- Gates, A., C. Johnson, and L. Hartling, Technology-assisted title and abstract screening for systematic reviews: a retrospective evaluation of the Abstrackr machine learning tool. Systematic Reviews, 2018. 7(1): p. 45.

- Singh, G., J. Thomas, and J. Shawe-Taylor, Improving Active Learning in Systematic Reviews. 2018.

- Holzinger, A., Interactive Machine Learning for Health Informatics: When Do We Need the Human-in-the-Loop? Brain Inf., 2016. 3(2): p. 119-131.

- Van de Schoot, R. and J. De Bruin, Researcher-in-the-loop for systematic reviewing of text databases. 2020. DOI: 10.5281/zenodo.4013207.

- Cohen, A.M., Performance of Support-Vector-Machine-Based Classification on 15 Systematic Review Topics Evaluated with the WSS@95 Measure. J Am Med Inform Assoc, 2011. 18(1): p. 104-104.

- Wang, Z., et al., Error rates of human reviewers during abstract screening in systematic reviews. PloS one, 2020. 15(1): p. e0227742.

- Yu, Z., N. Kraft, and T. Menzies, Finding better active learners for faster literature reviews. Empirical Software Engineering, 2018.

- Ros, R., E. Bjarnason, and P. Runeson. A machine learning approach for semi-automated search and selection in literature studies. in Proceedings of the 21st International Conference on Evaluation and Assessment in Software Engineering. 2017.

- Webster, A.J. and R. Kemp, Estimating omissions from searches. The American Statistician, 2013. 67(2): p. 82-89.

- Stelfox, H.T., et al., Capture-mark-recapture to estimate the number of missed articles for systematic reviews in surgery. The American Journal of Surgery, 2013. 206(3): p. 439-440.

- Kastner, M., et al., The capture–mark–recapture technique can be used as a stopping rule when searching in systematic reviews. Journal of clinical epidemiology, 2009. 62(2): p. 149-157.

- Bloodgood, M., & Vijay-Shanker, K. (2014). A method for stopping active learning based on stabilizing predictions and the need for user-adjustable stopping. arXiv preprint arXiv:1409.5165.

- Cohen, A. M. (2011). Performance of Support-Vector-Machine-Based Classification on 15 Systematic Review Topics Evaluated with the WSS@95 Measure [10/cskz4h]. J Am Med Inform Assoc, 18(1), 104-104. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3005879/

- Cormack, G. V., & Grossman, M. R. (2015). Autonomy and Reliability of Continuous Active Learning for Technology-Assisted Review. http://arxiv.org/abs/1504.06868

- Cormack, G. V., & Grossman, M. R. (2016). Engineering quality and reliability in technology-assisted review. Proceedings of the 39th International ACM SIGIR conference on Research and Development in Information Retrieval, 75-84.

- Kastner, M., Straus, S. E., McKibbon, K. A., & Goldsmith, C. H. (2009). The capture–mark–recapture technique can be used as a stopping rule when searching in systematic reviews. Journal of clinical epidemiology, 62(2), 149-157.

- Olsson, F., & Tomanek, K. (2009). An intrinsic stopping criterion for committee-based active learning. Proceedings of the Thirteenth Conference on Computational Natural Language Learning (CoNLL).

- Ros, R., Bjarnason, E., & Runeson, P. (2017). A machine learning approach for semi-automated search and selection in literature studies. Proceedings of the 21st International Conference on Evaluation and Assessment in Software Engineering.

- Stelfox, H. T., Foster, G., Niven, D., Kirkpatrick, A. W., & Goldsmith, C. H. (2013). Capture-mark-recapture to estimate the number of missed articles for systematic reviews in surgery. The American Journal of Surgery, 206(3), 439-440.

- Vlachos, A. (2008). A stopping criterion for active learning. Computer Speech & Language, 22(3), 295-312.

- Wallace, B. C., Small, K., Brodley, C. E., Lau, J., & Trikalinos, T. A. (2012). Deploying an interactive machine learning system in an evidence-based practice center: abstrackr. Proceedings of the ACM International Health Informatics Symposium (IHI), 819-824.

- Wallace, B. C., Trikalinos, T. A., Lau, J., Brodley, C., & Schmid, C. H. (2010). Semi-Automated Screening of Biomedical Citations for Systematic Reviews [https://doi.org/10.1186/1471-2105-11-55]. BMC Bioinform, 11(1), 55-55. https://doi.org/10.1186/1471-2105-11-55

- Wang, Z., Nayfeh, T., Tetzlaff, J., O’Blenis, P., & Murad, M. H. (2020). Error rates of human reviewers during abstract screening in systematic reviews. PLoS ONE, 15(1), e0227742.

- Webster, A. J., & Kemp, R. (2013). Estimating omissions from searches. The American Statistician, 67(2), 82-89.

- Yu, Z., Kraft, N., & Menzies, T. (2018). Finding better active learners for faster literature reviews. Empirical Software Engineering. https://doi.org/10.1007/s10664-017-9587-0

- Yu, Z., & Menzies, T. (2019). FAST2: An intelligent assistant for finding relevant papers. Expert Systems with Applications, 120, 57-71.