Starting a systematic review can feel like navigating a maze of countless articles and endless decisions. Enter ASReview – your trusty AI tool. ASReview isn’t just a one-trick pony; it’s more like a Swiss Army knife, equipped with a variety of models designed to tackle many different types of datasets.

In this blog post, we’re diving into these models. Every model has its own special powers and quirks. From the classic predictability of traditional classifiers to the complex power of neural networks, we’ll explore how each model can turn literature screening into your own optimized research plan. Whether you’re a seasoned researcher or just dipping your toes into the ocean of systematic reviews, join us on a tour of the powerful and diverse models available in ASReview.

Models, what are those?

ASReview is a tool heavily dependent on machine learning algorithms. When you begin a new systematic review in ASReview, you have the opportunity to select which models you will use. ASReview, with its strong academic roots, emphasizes the values of openness and fairness. And being scientists, we don’t limit you to a model we think is best for you; instead, we give you the freedom to choose. After all, you are the Oracle. But with this freedom comes a question: which combination of models will you select for your review?

Interested in comparing other options? Evaluate all available active learning-aided screening tools on functionality, open-source availability, and freedom of model choice.

ASReview uses active learning at its core. Active learning is a cycle in which you and an AI take turns doing what each does best, to finish the screening phase of a systematic review as efficiently as possible. What you do better than anyone or anything is labeling literature for your study. What AI does best is quickly going through tons and tons of data to present you with the most useful record to label next. Together, you make an incredible team! Want to know more about how active learning works? This blog post explains it in great detail.

So what exactly is AI? AI is a broad term, but we will let you in on a little secret. Listen carefully…

AI is just statistics on a large scale!

Don’t tell anyone, though! Keeping this in mind turns AI from magic into something that can be understood. And that’s exactly what we will do in this blog post: explain the small elements that together make up the powerful AI pipeline.

The ASReview active learning pipeline is made up of five elements:

- A Feature Extractor algorithm

- A Classification algorithm

- A Query Strategy

- A Balancing Strategy

- A Stopping Rule

These elements are algorithms. Give an algorithm some input, and in return it will give you some output based on the rules of the algorithm. By stringing these algorithms together, we create an AI pipeline that will help you in your systematic review screening. In this blog post, we will touch on feature extractors and classifiers.

Feature Extractors

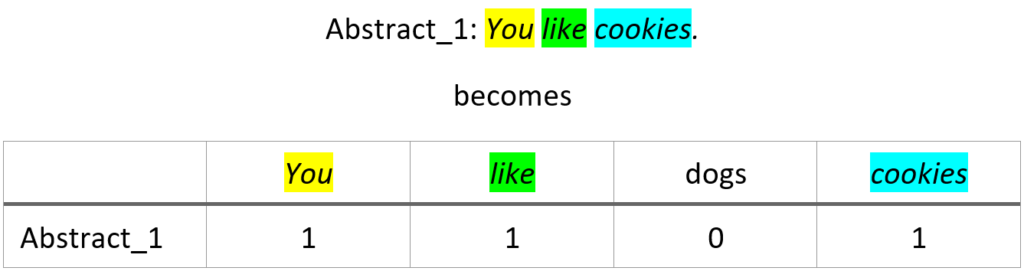

Machines do not understand text the way humans do. They are adept at processing numbers, but not so much at processing words or sentences. This limitation holds even for advanced models like the newest GPT models, which, despite their seemingly humanlike interaction, convert text into numerical representations before analyzing it. And since ASReview runs on a machine, we want to represent the text as numbers. Many rows of numbers: one row for each abstract. We do this using FEATURE EXTRACTORS.

Feature extractors are a crucial component of machine learning. At its core, a feature extractor is a tool that transforms text data into a numerical format, a process often referred to as vectorization. It identifies features in the text and encodes them as numbers. The result is a vector: a row of numbers in a table.

What are features, then? Features are pieces of information about a text. A feature can be the frequency of a word in a text, but also the location of a word in a sentence (position). It can even be more complex: a feature can be the semantic meaning of a word, or the other words a word refers to (attention). Any piece of information we can derive from an abstract using algorithms can be coded as a feature.

In the context of ASReview, feature extractors enable machine learning models to process and analyze vast amounts of text. From the features extracted from text, models can identify patterns, trends, and correlations, which might be indicative of the relevance or irrelevance of an article to a particular systematic review.

ASReview has different feature extractors available for you to select. Below is an (often simplified) overview of options, together with PROS and CONS for each model.

TF-IDF

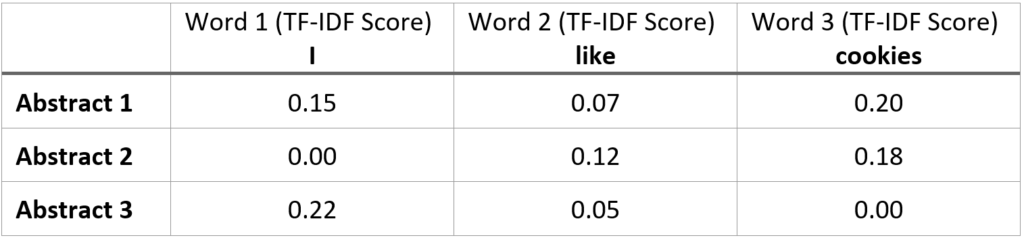

TF-IDF, short for Term Frequency-Inverse Document Frequency, is a feature extraction technique used in text mining and information retrieval. It is a feature extractor available in ASReview. It operates on two key concepts:

- Term Frequency (TF): This measures how frequently a term occurs in your abstract.

- Inverse Document Frequency (IDF): This measures how rare the term is across all of your abstracts. IDF is typically calculated as the logarithm of the total number of documents divided by the number of documents containing the term, so a term that appears in nearly every abstract gets a value close to zero.

The TF-IDF value is obtained by multiplying these two figures. This results in a numeric value for a word, signifying its importance in an abstract relative to your dataset. By doing this for every word in every abstract, we effectively transform text into numbers, with each column representing a specific word and its corresponding TF-IDF score and each row representing an abstract, allowing for subsequent machine learning analysis.
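To make the arithmetic concrete, here is a minimal hand-computed sketch. It assumes the common textbook definitions TF = (count of the term in the abstract) / (number of words in the abstract) and IDF = log(total abstracts / abstracts containing the term); the three toy abstracts and the helper names `tf`, `idf`, and `tf_idf` are made up for illustration. Library implementations such as scikit-learn’s `TfidfVectorizer` use a smoothed IDF and normalize each row, so their numbers will differ.

```python
import math
from collections import Counter

# Three made-up "abstracts", split into words.
abstracts = [
    "a study of depression relapse",
    "a study of depression onset",
    "a study of cake recipes",
]
tokenized = [a.split() for a in abstracts]


def tf(term, doc):
    """Term frequency: share of the abstract's words that are `term`."""
    return Counter(doc)[term] / len(doc)


def idf(term, docs):
    """Inverse document frequency: log(total documents / documents containing term)."""
    df = sum(1 for doc in docs if term in doc)
    return math.log(len(docs) / df)


def tf_idf(term, doc, docs):
    """TF-IDF score of `term` in `doc`, relative to the whole collection `docs`."""
    return tf(term, doc) * idf(term, docs)


for term in ["study", "depression", "relapse"]:
    print(term, round(tf_idf(term, tokenized[0], tokenized), 3))
# study 0.0
# depression 0.081
# relapse 0.22
```

A word that appears in every abstract, like “study”, scores zero, while the rarer “relapse” scores highest: its importance is measured relative to the dataset.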

PROS:

- Lightweight: TF-IDF has near-instant computation.

- Compatibility: TF-IDF produces no negative values, and will work with Naïve Bayes (Naïve Bayes? You’ll read about NB in the classifiers section!), while other feature extractors won’t.

- Simple: Works well on datasets where relevant records share consistent terminology.

- Interpretability: TF-IDF is easily interpretable when evaluating the feature matrix.

CONS:

- Loss of word order and context: TF-IDF does not incorporate word order, or the context that words appear in, into its representation. This can lead to a loss of meaning, especially in documents where phrasing and context are important. Without this information, do you know the difference between “not really” and “really not”? How about the difference between “bark” and “bark”?

- Ignores Semantics: TF-IDF doesn’t put different words with the same meaning together. It is thus up to the classifier to reconnect similar words. Identical and indistinguishable are very different words for TF-IDF. Yet they are identical/indistinguishable for us.

What do the vectors look like for TF-IDF? Here, each column is a different word, and the final table has one column for each word found across all abstracts. Each value is the term’s frequency in that abstract multiplied by its inverse document frequency (TF × IDF).

Doc2Vec

Compared to TF-IDF, ASReview’s Doc2Vec model is a lot more complex. Doc2Vec is a simple form of neural network. This means that instead of following rules written by a human, it learns its logic by training on data first, and then uses this learned logic to perform its task. Doc2Vec learns this logic in four steps:

- During the initial setup, each document in your dataset is randomly assigned a vector. These vectors are not meaningful yet.

- Then, the model is trained. Training works as follows: the model reads a few words from an abstract and tries to predict the next word. It does this by using the vectors of the surrounding words and the vector assigned to this specific document. The prediction is a probability distribution over all words in the vocabulary – essentially, a guess at which word comes next.

- If the prediction is wrong, the model adjusts the vectors slightly, using a method called backpropagation. Over many iterations, the predictions get better and better, as the vectors start capturing the essence of the words and documents – their meaning, usage, and context.

- After sufficient training, the vectors stabilize. They don’t change much anymore because the model has become good at prediction. These trained vectors are the output of your doc2vec model, which you will use for your classification.
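The four steps can be sketched as a toy in plain NumPy. This is a heavily simplified version of the PV-DM flavour of Doc2Vec: the document vector and the vectors of the previous `window` words are averaged, a softmax predicts the next word, and the gradient of the prediction error (what backpropagation computes automatically in larger networks) nudges every vector slightly. It is not gensim’s implementation or the one ASReview uses; the function name `train_toy_pv_dm` and all hyperparameters (`dim`, `window`, `epochs`, `lr`) are illustrative assumptions.

```python
import numpy as np


def softmax(z):
    z = z - z.max()  # for numerical stability
    e = np.exp(z)
    return e / e.sum()


def train_toy_pv_dm(docs, dim=8, window=2, epochs=200, lr=0.1, seed=0):
    """Toy PV-DM: predict each word from the preceding `window` words plus the document vector."""
    tokens = [d.lower().split() for d in docs]
    vocab = sorted({w for doc in tokens for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    rng = np.random.default_rng(seed)
    # Step 1: random initial vectors, not meaningful yet.
    doc_vecs = rng.normal(scale=0.1, size=(len(docs), dim))
    word_vecs = rng.normal(scale=0.1, size=(len(vocab), dim))
    out_weights = rng.normal(scale=0.1, size=(dim, len(vocab)))
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for d, doc in enumerate(tokens):
            for t in range(window, len(doc)):
                context = [index[w] for w in doc[t - window:t]]
                target = index[doc[t]]
                # Step 2: combine document and context vectors, guess the next word.
                hidden = (doc_vecs[d] + word_vecs[context].sum(axis=0)) / (window + 1)
                probs = softmax(hidden @ out_weights)
                epoch_loss -= np.log(probs[target])
                # Step 3: gradient of the cross-entropy error; nudge every vector slightly.
                grad_scores = probs.copy()
                grad_scores[target] -= 1.0
                grad_hidden = out_weights @ grad_scores
                out_weights -= lr * np.outer(hidden, grad_scores)
                doc_vecs[d] -= lr * grad_hidden / (window + 1)
                for c in context:
                    word_vecs[c] -= lr * grad_hidden / (window + 1)
        losses.append(epoch_loss)
    # Step 4: after training, the document vectors are the features.
    return doc_vecs, losses


docs = ["I love cookies and cake", "I hate cookies and cake", "my cat is named meowzers"]
doc_vectors, losses = train_toy_pv_dm(docs)
print(doc_vectors.shape)  # (3, 8): one 8-number row per document
print(f"loss first epoch {losses[0]:.2f}, last epoch {losses[-1]:.2f}")
```

On real data you would rely on a library such as gensim, which adds tricks like negative sampling to make training on large vocabularies feasible.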

Neural networks: The term neural network is indeed derived from the neurons one finds in our brains. However, they work, learn, and function quite differently. A neural network is modeled after a brain like an airplane is modeled after a bird. The metaphor only works up to a point. The machine learning implementation of the brain has diverged significantly from how the brain works.

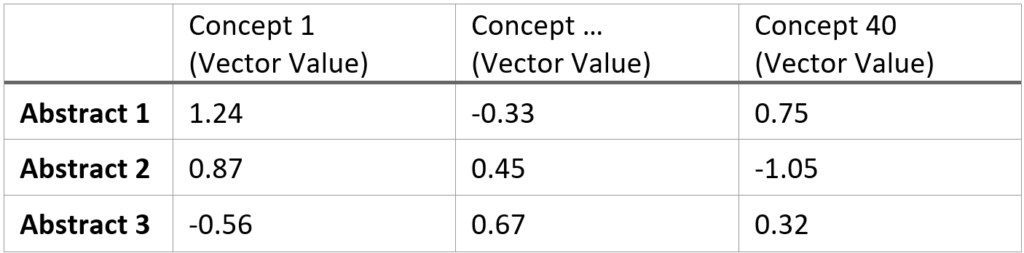

The great thing about learning how a word is used in language is that the model also learns how similar words are used, and in what context a word appears. Doc2Vec learns the meaning of words in a text by observing how they appear in relation to other words, essentially learning associations and patterns from their usage. By doing this repeatedly across many contexts and documents, the model builds a multi-dimensional space where words with similar contexts and meanings are positioned closer to each other. And what is a multi-dimensional space? It’s just rows and columns of numbers! The rows represent the abstracts, and the columns represent dimensions of meaning.

Not One Meaning Per Dimension: In the multi-dimensional space of Doc2Vec, each dimension does NOT correspond to an INTERPRETABLE meaning or concept. Instead, each dimension represents a feature learned from the text data. Sadly, these features are often abstract and not easily interpretable. Too bad!

This multi-dimensional space makes Doc2Vec particularly powerful for tasks like document similarity, where you want to understand how closely related different documents are based on their content.
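Closeness in such a space is commonly measured with cosine similarity. The sketch below computes it for a few made-up 4-dimensional vectors (hypothetical values chosen for illustration; ASReview’s Doc2Vec vectors have 40 dimensions) and ranks documents by how similar they are to the first one. The helper names are our own.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def rank_by_similarity(query, doc_vectors):
    """Return document indices ordered from most to least similar to `query`, plus the scores."""
    scores = [cosine_similarity(query, v) for v in doc_vectors]
    return list(np.argsort(scores)[::-1]), scores


# Hypothetical document vectors, one row per document.
doc_vectors = np.array([
    [0.9, 0.1, 0.0, 0.2],  # made-up vector for an abstract on depression relapse
    [0.8, 0.2, 0.1, 0.3],  # made-up vector for an abstract on depression onset
    [0.0, 0.1, 0.9, 0.0],  # made-up vector for an abstract on baking
])
order, scores = rank_by_similarity(doc_vectors[0], doc_vectors)
print([int(i) for i in order])          # [0, 1, 2]
print([round(s, 3) for s in scores])
```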

PROS:

- Contextual and Semantic Understanding: Doc2Vec is very good at understanding the context and semantics of words within documents. It captures the nuanced relationships between words based on their usage in different contexts, offering a richer representation of text data.

- Preservation of Order: Unlike simpler models like TF-IDF, Doc2Vec takes (local) word order into account, because it learns from the words surrounding each word. This helps in capturing the narrative or flow of ideas in a document, which can be crucial where the specific ordering of words has a lot of impact (think of legal documentation, for example, or a medical patient history).

- No outside bias: Doc2Vec is trained only on your data, and therefore cannot include bias from outside of your dataset.

CONS:

- Computational Intensity: Doc2Vec is computationally demanding, particularly with large datasets. After all, it is learning a language from scratch! Keep this in mind when selecting Doc2Vec as your feature extractor, as it might pose challenges in processing time and resource requirements.

- Hyperparameter Sensitivity: The performance of Doc2Vec can be sensitive to the choice of hyperparameters (the gears and knobs to optimize the learning capacity of the model). While ASReview’s hyperparameters are verified extensively with countless simulations, it is challenging to determine the optimal settings for Doc2Vec that would suit every user’s specific review scenario.

- Low Explainability: The dimensions in the vector representations produced by Doc2Vec are abstract and not easily interpretable. This might be a concern in scientific applications where the interpretability of the feature extractor is important.

- Single Language: Doc2Vec does not work well for datasets that mix multiple languages.

What do the vectors look like for Doc2Vec? Here, each column is an abstract concept dimension as extracted from the documents. The vectors will have as many dimensions as set by us via the vector_size hyperparameter. For ASReview’s Doc2Vec model, there are 40 of these dimensions.

SBERT

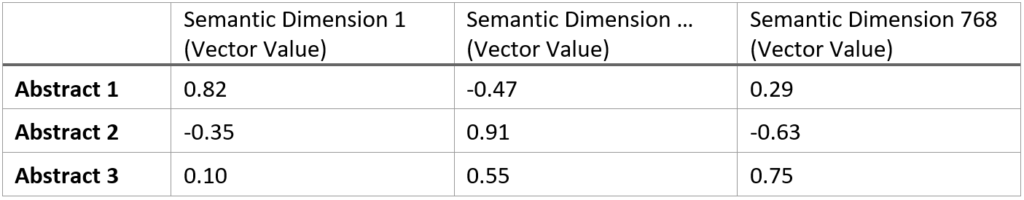

SBERT, short for Sentence-BERT, is a pre-trained BERT model (Bidirectional Encoder Representations from Transformers), that’s specialized in evaluating sentences. It uses a transformer architecture, and yes, this is the same architecture that powers the latest GPT models! SBERT is designed to create embeddings for entire paragraphs, making it very useful for semantic similarity analysis.

Embeddings: Embeddings are the same as vectors which are simply rows of numbers. However, embedding is the conventional term when dealing with transformers. Confusing, we know!

Just like Doc2Vec, SBERT builds a multi-dimensional space where dimensions represent meanings. Sadly, explaining exactly how transformers work is outside the scope of this blog post. Out of the three feature extractors we’ve seen, SBERT is the only one that understands sentence context before it ever sees your data. It comes pre-packaged with the ability to read before you’ve used it. Because it is pre-trained, there are many specialized versions of SBERT trained for specific contexts. Specialized models exist that are trained only on scientific literature, or even on many different languages at the same time, enabling them to process multilingual datasets. Examples such as SciBERT, LaBSE, and BERTje show the versatility of the BERT model.

The pre-trained model that ASReview uses is called “all-mpnet-base-v2”. This model was tested and found to perform well in ASReview. It was trained on over 1 billion sentence pairs, drawn from sources including Reddit comments from 2015 to 2018, Yahoo Answers, Simple Wikipedia, the Semantic Scholar Open Research Corpus, and many more.

All-mpnet-base-v2 maps your abstracts to 768 different dimensions. The Doc2Vec model used in ASReview has only 40 dimensions. However, the dimensions for Doc2Vec are derived from your own dataset, while the dimensions for SBERT are predetermined during its training.

PROS:

- Strong Theoretical Performance: Transformers are widely regarded as the state of the art among feature extractors. Their advanced understanding of semantics and context makes them extremely good at interpreting text.

- Multilingual and Domain-Specific Models: SBERT’s versatility is further enhanced by its range of models that cater to different languages and specialized domains, offering tailored solutions for diverse research needs.

CONS:

- Computational and Memory Intensity: Preparing SBERT for use in your project can be time-consuming. Expect a long preprocessing time and a large RAM requirement. For processing times, check out our paper.

- Complexity and Opacity: Like Doc2Vec, SBERT’s internal mechanisms are complex. This complexity often makes it challenging to decipher why the model makes specific decisions or interpretations.

- Variable Performance Across Datasets: Although SBERT excels at understanding context and semantics, in practice we’ve seen that not all datasets in ASReview benefit from this. Ask yourself: can your inclusion criteria only be derived from semantics and context? If not, a model like TF-IDF might suffice.

- Potential Bias: Since SBERT is pre-trained on existing data, there’s a risk that it may inherit biases present in its training data. Consider this aspect for your study, as it might impact the objectivity and validity of your findings.

What does the embedding look like for SBERT? Where for Doc2Vec the dimensions are derived from the dataset you trained on, for SBERT the columns are dimensions that were developed and set during the pre-training of this transformer model.

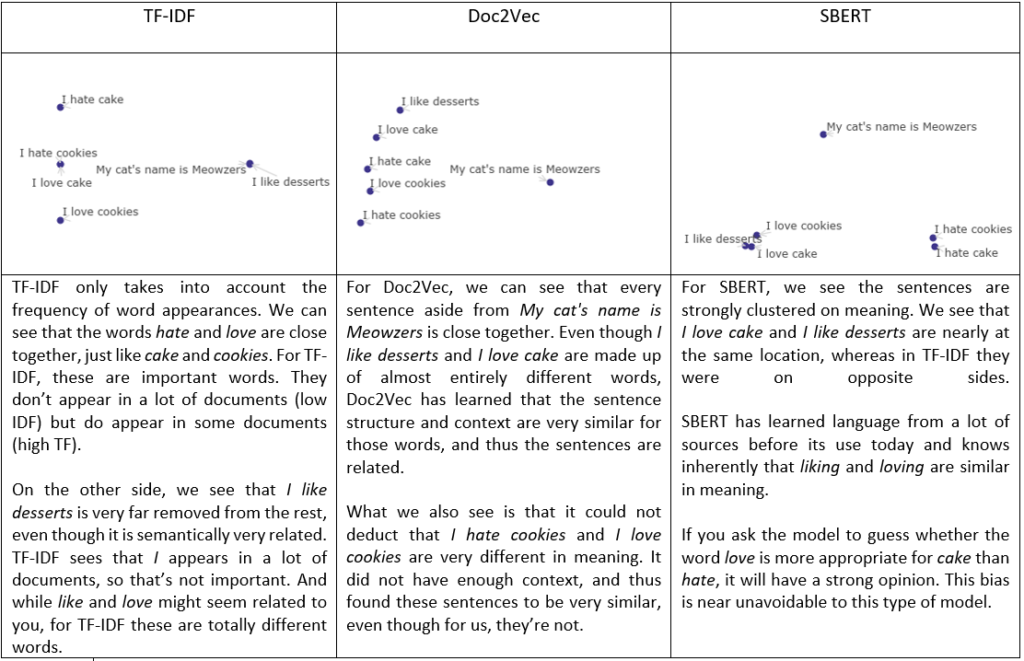

Visually representing the feature extractors

Using our newfound knowledge of feature extractors, we can create embeddings for sentences. For the following demonstration, we use TF-IDF, Doc2Vec, and SBERT to create embeddings for the following sentences:

["I love cookies", "I love cake", "I hate cookies", "I hate cake", "I like desserts", "My cat's name is Meowzers"]

Using a technique called PCA (principal component analysis), we can reduce these high-dimensional rows of data to two dimensions. This is useful for explaining embeddings, because we can visualize two dimensions as a scatter plot! While simplified, you can interpret distance on the scatter plot as relatedness: points close together are similar, and points far apart are dissimilar.
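Here is a rough sketch of the TF-IDF part of this demonstration, assuming scikit-learn: the six sentences are vectorized, and PCA projects each row to two coordinates you could feed to a scatter plot. With scikit-learn’s default tokenizer, the one-letter word “I” is dropped and “cat’s” becomes “cat”. The exact coordinates depend on these settings, so they will not necessarily match our figure; the Doc2Vec and SBERT variants would swap in a different feature extractor.

```python
from sklearn.decomposition import PCA
from sklearn.feature_extraction.text import TfidfVectorizer

sentences = ["I love cookies", "I love cake", "I hate cookies",
             "I hate cake", "I like desserts", "My cat's name is Meowzers"]

# Feature extraction: one TF-IDF row per sentence, one column per word.
embeddings = TfidfVectorizer().fit_transform(sentences).toarray()

# PCA keeps the two directions along which the rows vary the most.
points = PCA(n_components=2).fit_transform(embeddings)

for sentence, (x, y) in zip(sentences, points):
    print(f"{sentence!r:30} x={x:+.2f} y={y:+.2f}")
```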

Classifiers

After processing text into a numerical format using feature extractors, the next step for ASReview is classification. Classifiers are algorithms that sort data into categories, based on examples whose category is already known (labeled data). The key to a good classifier is its ability to accurately find which features make each class unique. In the case of ASReview, there are two classes: relevant and irrelevant.

Normally, in machine learning, you would start your classification after collecting labeled data. Labeled data is data where each data point has its own label. For ASReview, this would be the combination of text and relevance. Using this data, the machine learning algorithm can make decisions on unlabeled data.

However, doing a systematic review introduces a unique challenge. The research you are doing is unique! It is very likely that nobody before you had the idea to select data for this exact research question. Unlike typical machine learning scenarios, where you start with a substantial amount of labeled data, in systematic reviews, at the initial stages, you have limited labeled data (or no labeled data at all!). This is where the concept of active learning becomes important.

Active learning: We have a very nice post on active learning. It will show you, step by step, how the active learning cycle works.

The challenge for models in ASReview lies in being efficient in both the early and later stages of active learning. In the early stages, when there is little labeled data, the model needs to be robust enough to make accurate predictions with limited information. This is important because early predictions strongly influence the performance of the whole screening phase. If the model performs poorly at this stage, it may take a long time for the active learning cycle to kick into gear.

For each piece of data that you label, the model adapts and refines its predictions. In the later stages of the active learning cycle, the challenge shifts to efficiently combing through the increasingly sparse dataset to identify any remaining relevant articles, which are often few, scattered, and hard to find.
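A stripped-down simulation shows how the model adapts with every label. The hypothetical `simulate_screening` function below (our own name, not an ASReview API) computes TF-IDF features once, then repeatedly retrains a Naive Bayes classifier on everything labeled so far, shows the unlabeled record with the highest predicted relevance, and reveals its true label, as a simulated reviewer would. It assumes scikit-learn, eight made-up records, and one relevant plus one irrelevant record as prior knowledge. Balancing and stopping rules are left out; the loop simply stops once every relevant record has been found.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB


def simulate_screening(texts, true_labels, prior_relevant, prior_irrelevant):
    """Toy active learning loop: retrain, rank, 'label' the top record, repeat."""
    X = TfidfVectorizer().fit_transform(texts)  # features are computed only once
    y = np.asarray(true_labels)
    labeled = [prior_relevant, prior_irrelevant]  # prior knowledge: one record of each class
    n_relevant = int(y.sum())
    while int(y[labeled].sum()) < n_relevant:
        unlabeled = np.setdiff1d(np.arange(len(texts)), labeled)
        model = MultinomialNB().fit(X[labeled], y[labeled])  # retrain from scratch each cycle
        relevance = model.predict_proba(X[unlabeled])[:, 1]  # column 1 = class "relevant"
        pick = int(unlabeled[np.argmax(relevance)])  # show the most likely relevant record
        labeled.append(pick)  # the simulated reviewer reveals its true label
    return labeled


texts = [
    "depression relapse in adults",
    "onset of depression in adolescents",
    "maintenance therapy for depression",
    "relapse prevention after depression",
    "chocolate cake recipe",
    "baking cookies at home",
    "weather forecast for the weekend",
    "football match results",
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]  # made-up ground truth: 1 = relevant

order = simulate_screening(texts, labels, prior_relevant=0, prior_irrelevant=4)
print(order)  # screening order, ending with the last relevant record found
```

The returned list is the screening order: the shorter it is, the fewer records had to be read before every relevant one turned up.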

Model Switching: While we aim to have models that excel at both stages, you could choose to switch from one model to another at a certain point. Have the best of both worlds!

Below, we discuss five types of classifiers used in ASReview, providing a basic understanding of how each works.

Naive Bayes Classifier (classifiers.NaiveBayesClassifier : nb)

The Naive Bayes classifier is based on Bayes’ Theorem, which deals with probability. This classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature. It’s like determining the likelihood of enjoying a movie based on liking the genre, regardless of the movie’s director or cast. Naive Bayes is particularly known for its simplicity and speed.
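Here is a small sketch using scikit-learn’s `MultinomialNB` as a stand-in for the nb classifier (we make no claim about ASReview’s exact settings). It checks that a Naive Bayes prediction really is just a log prior plus an independent contribution from each word, and shows why the model needs non-negative features such as TF-IDF: shifting the features below zero makes scikit-learn refuse to fit. The toy texts and labels are invented.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

texts = ["depression relapse study", "depression onset study",
         "chocolate cake recipe", "cake baking tips"]
labels = [1, 1, 0, 0]  # toy labels: 1 = relevant, 0 = irrelevant

vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(texts).toarray()
nb = MultinomialNB().fit(X, labels)

# Bayes' theorem in log space: log prior + sum over words of (value * log P(word | class)).
# Each word contributes independently of the others: the "naive" assumption.
joint = X @ nb.feature_log_prob_.T + nb.class_log_prior_
manual_proba = np.exp(joint - joint.max(axis=1, keepdims=True))
manual_proba /= manual_proba.sum(axis=1, keepdims=True)
print(np.allclose(manual_proba, nb.predict_proba(X)))  # True

new = vectorizer.transform(["depression relapse"]).toarray()
print(nb.predict(new))  # [1]

# Features with negative values (common in Doc2Vec or SBERT embeddings) are rejected.
rejected = False
try:
    MultinomialNB().fit(X - 0.5, labels)
except ValueError as err:
    rejected = True
    print("rejected:", err)
```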

Random Forest Classifier (classifiers.RandomForestClassifier : rf)

Imagine a forest where each tree gives a vote on whether an article is relevant or not. The Random Forest classifier builds multiple decision trees and merges their results to get more accurate and stable predictions. Each tree in the ‘forest’ considers a random subset of features and makes a decision. The majority vote among all trees determines the final classification. This method is effective because it reduces the risk of overfitting (fitting too closely to a particular set of data).
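To peek inside the forest, this sketch (assuming scikit-learn, with a synthetic dataset from `make_classification` in place of real embeddings) asks each of 25 trees for its individual vote. One caveat: scikit-learn’s `RandomForestClassifier` combines trees by averaging their predicted probabilities rather than counting hard votes, so its final prediction can occasionally differ from a strict majority.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for a feature matrix: 200 records with 20 features each.
X, y = make_classification(n_samples=200, n_features=20, random_state=0)

forest = RandomForestClassifier(n_estimators=25, random_state=0).fit(X, y)

# Ask every tree in the forest for its own vote on the first five records.
votes = np.array([tree.predict(X[:5]) for tree in forest.estimators_])
share_relevant = votes.mean(axis=0)  # fraction of trees voting for class 1

print(votes.shape)      # (25, 5): 25 trees x 5 records
print(share_relevant)
```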

Support Vector Machine Classifier (classifiers.SVMClassifier : svm)

Support Vector Machine (SVM) classifiers find the best boundary that separates different categories of data. Imagine plotting all your data on a graph, and the SVM finds a line (or hyperplane in multiple dimensions) that best divides and categorizes your data points. It’s particularly effective in high-dimensional spaces.
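For a linear SVM, the boundary is literally a line (or hyperplane) `w · x + b = 0`. This sketch, assuming scikit-learn’s `SVC` with a linear kernel on two synthetic 2-D clusters, recovers `w` and `b` and confirms that the classifier’s score is just that linear formula, with its sign deciding the class. ASReview’s own SVM settings may differ.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two synthetic clusters of 2-D points standing in for relevant and irrelevant records.
X, y = make_blobs(n_samples=40, centers=2, random_state=6)
svm = SVC(kernel="linear", C=1.0).fit(X, y)

w = svm.coef_[0]       # normal vector of the separating line
b = svm.intercept_[0]  # offset of the line
print(f"boundary: {w[0]:.2f}*x1 + {w[1]:.2f}*x2 + {b:.2f} = 0")

# The score is w . x + b; a positive score means the second class in svm.classes_.
scores = X @ w + b
print(np.allclose(scores, svm.decision_function(X)))  # True
predicted = svm.classes_[(scores > 0).astype(int)]
print((predicted == svm.predict(X)).all())             # True
```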

Logistic Regression Classifier (classifiers.LogisticClassifier : logistic)

Despite its name, Logistic Regression is a classification method, not a regression method. It estimates the probability that a given input point belongs to a certain class. It is a method that borrows heavily from the field of statistics.
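Logistic regression computes a weighted sum of the features and squashes it into a probability with the logistic (sigmoid) function 1 / (1 + e^(-z)). This sketch, assuming scikit-learn and synthetic data, reproduces the model’s predicted probabilities by hand from its learned coefficients.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=200, n_features=10, random_state=0)
logreg = LogisticRegression().fit(X, y)

# Linear score per record, then the sigmoid turns it into P(class 1).
z = X @ logreg.coef_[0] + logreg.intercept_[0]
p_manual = 1.0 / (1.0 + np.exp(-z))
print(np.allclose(p_manual, logreg.predict_proba(X)[:, 1]))  # True
```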

Neural Network Classifier (classifiers.NN2LayerClassifier : nn-2-layer)

This classifier is a fully connected, two-layer dense neural network. In a fully connected neural network, each node in one layer is connected to every node in the next layer. The network is trained by letting it make predictions on the labeled data and comparing them to the true labels. The weights of the connections are then adjusted in proportion to how wrong each prediction was; correct, confident predictions barely change them. That way, the network gradually gets better at predicting. These networks are capable of learning complex patterns but require more data and computational power than other classifiers.
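As a stand-in for nn-2-layer (not ASReview’s implementation), scikit-learn’s `MLPClassifier` with two hidden layers of 32 units shows what “fully connected” means in practice: each weight matrix links every unit in one layer to every unit in the next. The layer sizes and the synthetic data are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.neural_network import MLPClassifier

X, y = make_classification(n_samples=300, n_features=40, random_state=0)

# Two hidden dense layers of 32 units each; training adjusts the weights by backpropagation.
net = MLPClassifier(hidden_layer_sizes=(32, 32), max_iter=500, random_state=0).fit(X, y)

for i, weights in enumerate(net.coefs_):
    print(f"layer {i} -> {i + 1}: weight matrix {weights.shape}")
# layer 0 -> 1: (40, 32), layer 1 -> 2: (32, 32), layer 2 -> 3: (32, 1)
```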

Processing times

We all have places to be, tasks to accomplish, and deadlines to meet. Even though ASReview will already save you an incredible amount of time (averages range from 67% to 92%), it is worth understanding the processing times you will encounter in ASReview.

Feature extraction can be computationally intensive: it involves analyzing each piece of text and converting it into a numerical format that machine learning algorithms can understand. The silver lining is that this is a one-time process. Once the text has been converted into embeddings, these numerical representations can be reused without recalculating them. This initial investment in processing time pays off in the long run, as it lays the foundation for all active learning cycles.
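The “compute once, reuse many times” idea can be sketched as a simple disk cache. The function `load_or_compute_features` below is hypothetical (it is not how ASReview stores its feature matrices); it assumes scikit-learn for TF-IDF and SciPy’s `save_npz`/`load_npz` for storing the sparse matrix. It deliberately does not check whether the texts changed since the cache was written.

```python
import os
import tempfile

import numpy as np
import scipy.sparse
from sklearn.feature_extraction.text import TfidfVectorizer


def load_or_compute_features(texts, cache_path):
    """Hypothetical helper: compute TF-IDF features once, reuse them from disk afterwards."""
    if os.path.exists(cache_path):
        return scipy.sparse.load_npz(cache_path), True
    features = TfidfVectorizer().fit_transform(texts)
    scipy.sparse.save_npz(cache_path, features)
    return features, False


texts = ["depression relapse", "depression onset", "chocolate cake"]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "features.npz")
    first, cached_first = load_or_compute_features(texts, path)    # computed
    second, cached_second = load_or_compute_features(texts, path)  # loaded from disk
    same = np.allclose(first.toarray(), second.toarray())

print(cached_first, cached_second, same)  # False True True
```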

Timing: The following indications are based on one of our simulation studies, performed in ASReview, using a dataset containing 46,376 records.

From quickest to slowest embedding calculation time:

- TF-IDF – times ranged from 13 to 23 seconds

- Doc2Vec – times ranged from 15 to 17 minutes

- SBERT – times ranged from 6 to 7 hours

In contrast to feature extractors, classifiers in ASReview are retrained once per active learning cycle. In each cycle, the system builds the classifier from the ground up and then asks it for new predictions. The processing time for classifiers depends on the complexity of the algorithm, the size of the dataset, and the available computational resources.

- nb: The fastest model around, ideal for fast computations (about 0.03 s/cycle)

- logistic: Low processing cost, scales well with increasing data size (about 0.10 s/cycle)

- rf: Medium resource intensity, involves building multiple decision trees (about 1.5 s/cycle)

- nn-2-layer: Processing-intensive due to its multi-layered architecture (about 2.4 s/cycle)

- svm: Complexity increases with data size and feature count, leading to higher processing demands for larger datasets (about 9 s/cycle)
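Want a feel for per-cycle costs on your own machine? This sketch times a single from-scratch fit of four scikit-learn classifiers on synthetic data. The model settings are our own guesses rather than ASReview’s configuration, nn-2-layer is left out, and the dataset is far smaller than the 46,376-record simulation mentioned earlier, so treat the numbers as a rough illustration only.

```python
import time

import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import MultinomialNB
from sklearn.svm import SVC

X, y = make_classification(n_samples=500, n_features=20, random_state=0)
X = np.abs(X)  # MultinomialNB requires non-negative features

classifiers = {
    "nb": MultinomialNB(),
    "logistic": LogisticRegression(max_iter=1000),
    "rf": RandomForestClassifier(random_state=0),
    "svm": SVC(kernel="linear", probability=True),
}

timings = {}
for name, clf in classifiers.items():
    start = time.perf_counter()
    clf.fit(X, y)  # one "cycle" = retraining the classifier from scratch
    timings[name] = time.perf_counter() - start

for name, seconds in sorted(timings.items(), key=lambda item: item[1]):
    print(f"{name:9} {seconds:.3f} s")
```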

Note that each of these classifiers takes under 10 seconds per cycle. If you, the labeler, take longer than this to assess and label an abstract, the retrained classifier is ready before you are, and ASReview simply waits for your label before starting the next cycle; the calculation time of the classifier then has no impact on your screening. Only when you label faster than the classifier can be retrained will ASReview skip an active learning cycle and continue with the previous iteration of the classifier. Even then, this has little impact on the performance of ASReview.

Future research: Research is validated by research. If you use these times in your own research, please cite our work: “Active learning-based systematic reviewing using switching classification models: the case of the onset, maintenance, and relapse of depressive disorders”.

Feature Extractors and Classifier Interactions

Considering what we’ve touched on in this blog post, your final question might be: so which model is best for me? Ultimately, it is impossible to name a single best model combination. Only you know the situation of your research question and environment. Therefore, you are the best judge of models!

Moreover, models can have complex interactions. Some classifiers make more efficient use of the embeddings from certain feature extractors than others. Some classifiers can only use embeddings with non-negative numbers (which is why Naïve Bayes only works with TF-IDF). Some combinations require a lot of memory (nn-2-layer with TF-IDF, for example). Therefore, we provide you with predefined combinations in ASReview, selected and verified by us to work well based on many, many simulations. Want to verify this yourself? Learn how to run simulations!

Simulation performance: Check these figures out if you’re interested in seeing these simulations.

The combinations we selected for you in ASReview are:

- Lightweight and Powerful – TF-IDF with Naïve Bayes

- Context- and Sentiment-aware Deep Learning – SBERT with XGBoost

- Very powerful at finding the final records – Doc2Vec with Random Forest

- Multilingual – LaBSE with logistic regression

Plugins: If you’re interested in more models for ASReview, why not install a model plugin or, even better, create your own?

ASReview offers a wide range of models for conducting systematic reviews, each with its unique strengths and limitations. The choice between feature extractors like TF-IDF, Doc2Vec, and SBERT, and classifiers such as Naïve Bayes, Random Forest, and Logistic Regression, depends on your dataset and research question. Ultimately, your knowledge of the subject matter and the specific demands of your systematic review will allow you to make the right choice.

Thank you for reading, and good luck on your adventure!