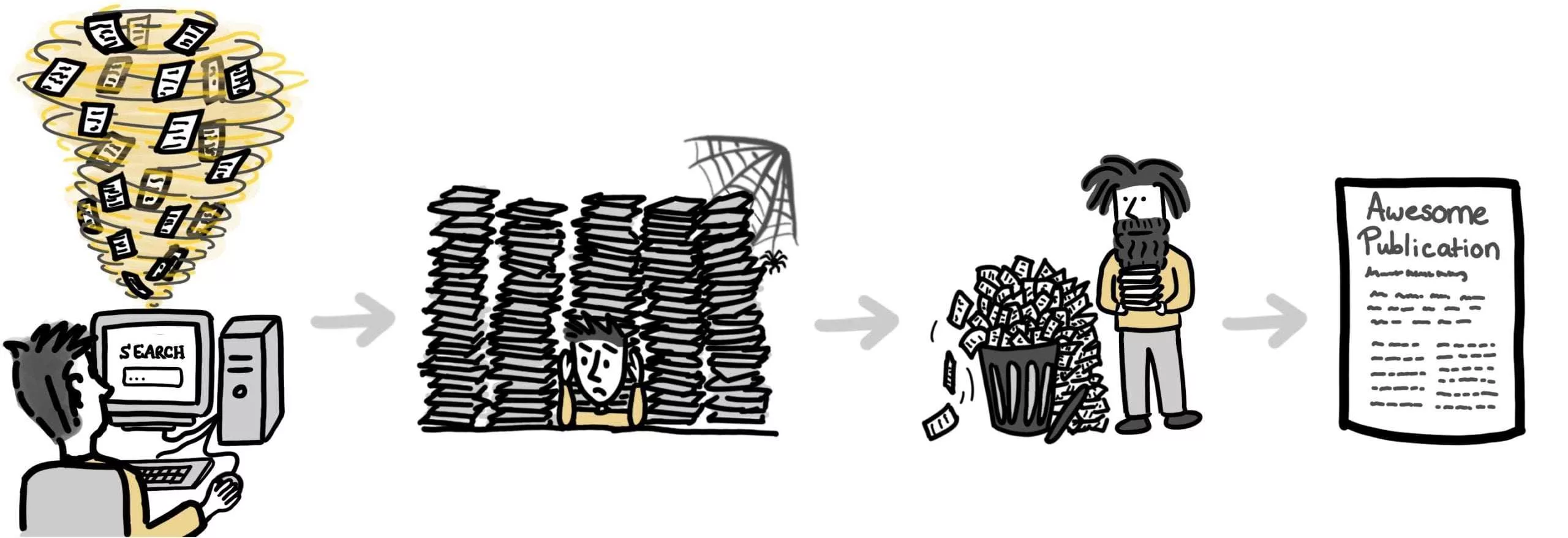

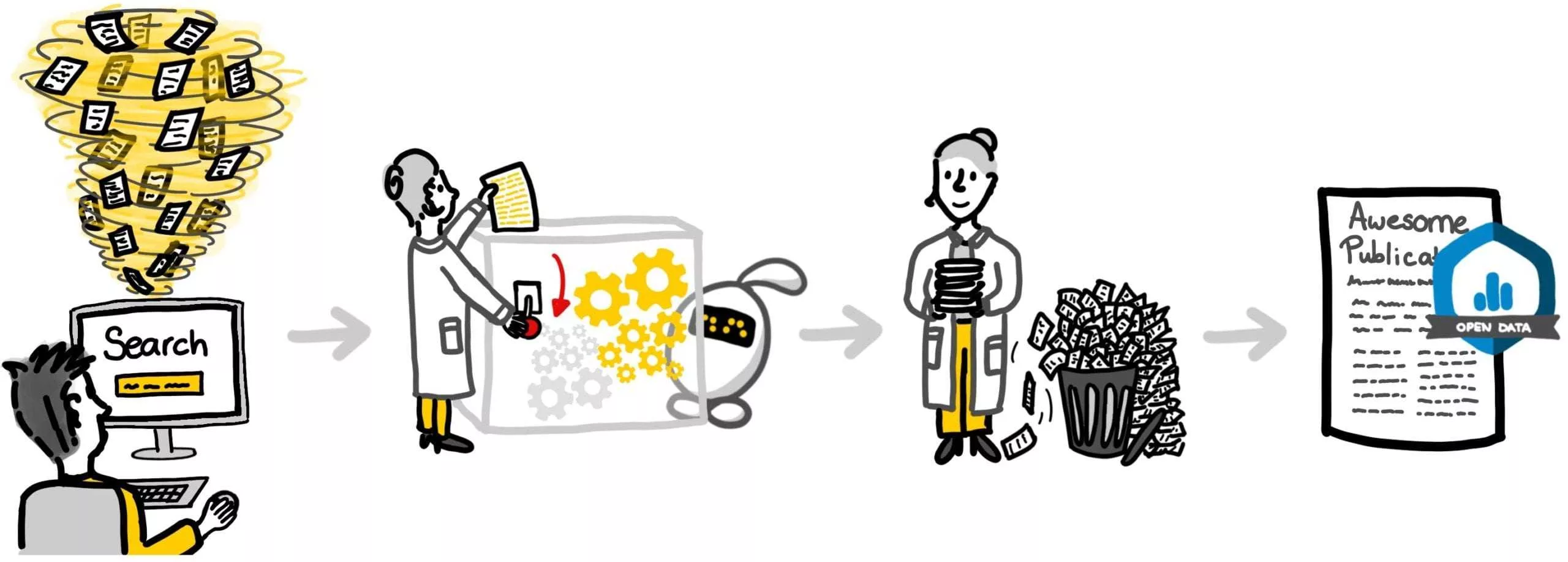

Anyone who has screened large numbers of texts, such as scientific abstracts for a systematic review, knows how labor-intensive this can be. With the rapidly evolving field of Artificial Intelligence (AI), much of this manual work can be reduced or even completely replaced by software using active learning. ASReview enables you to screen more texts in the same amount of time than traditional screening allows, which means you can achieve a higher quality than with the traditional approach. Before Elas* was there to help you, systematic reviewing was an exhausting task, often very boring, but not any longer!

*Your Electronic Learning Assistant, who comes with ASReview. Read more about The principles of Elas.