Get all the relevant texts

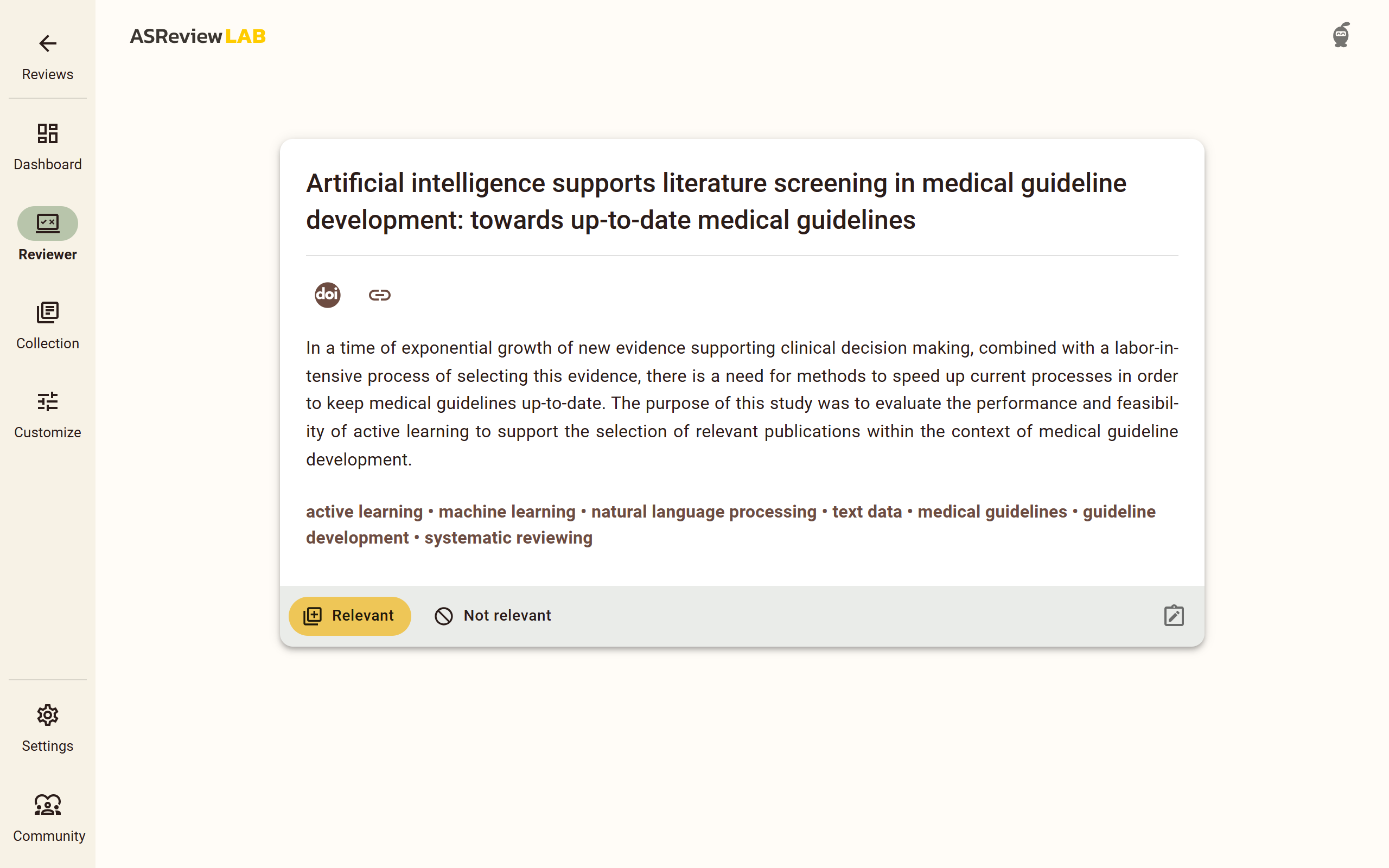

Distraction-free

Screen faster

Accelerate your workflow with efficient text screening.

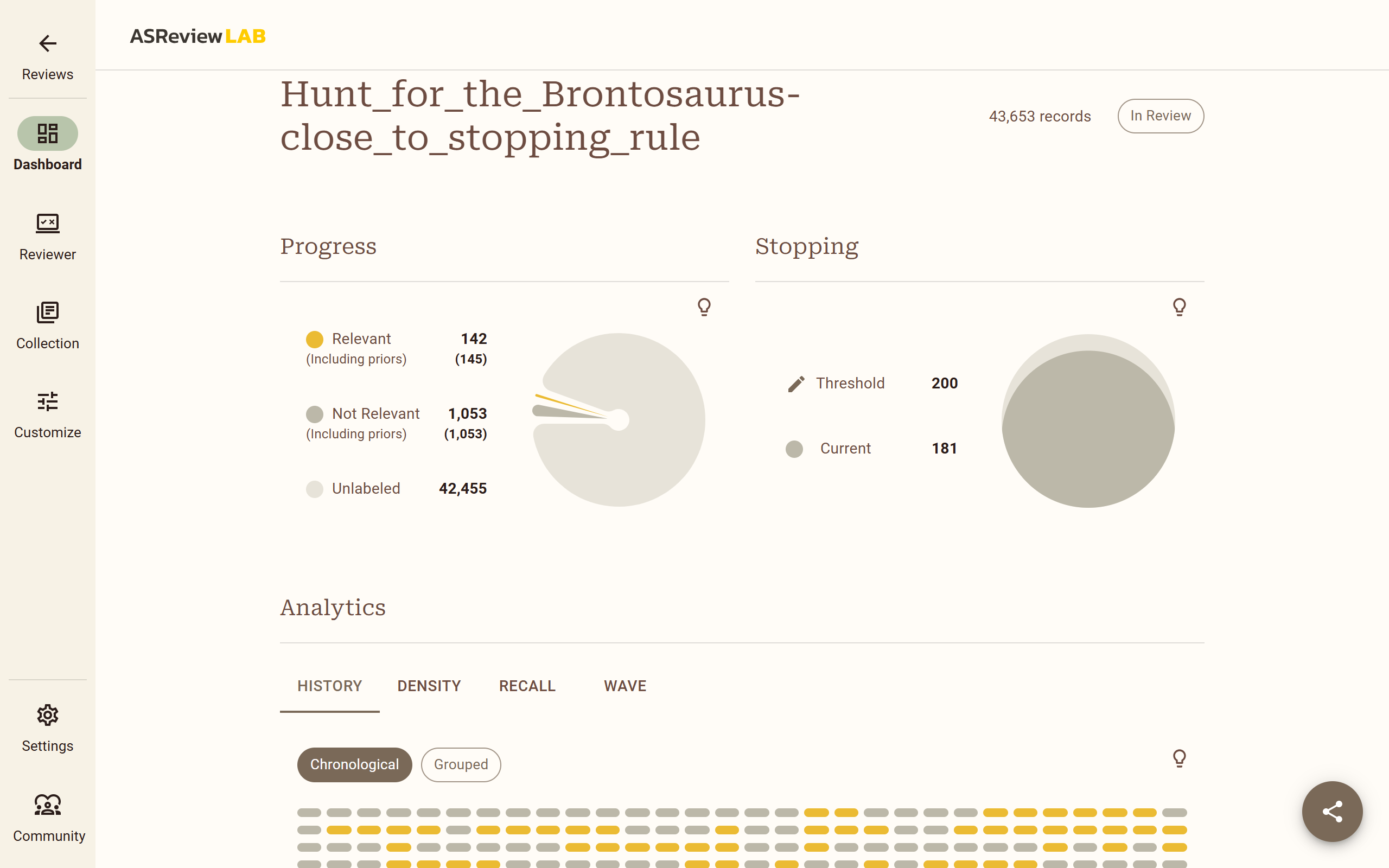

Real-time insights

Instant data analysis delivering actionable insights on the spot.

For all kinds of users

User-friendly at its core

Built to make every interaction a breeze for every user.

Clean interface

A simple and intuitive design for effortless navigation.

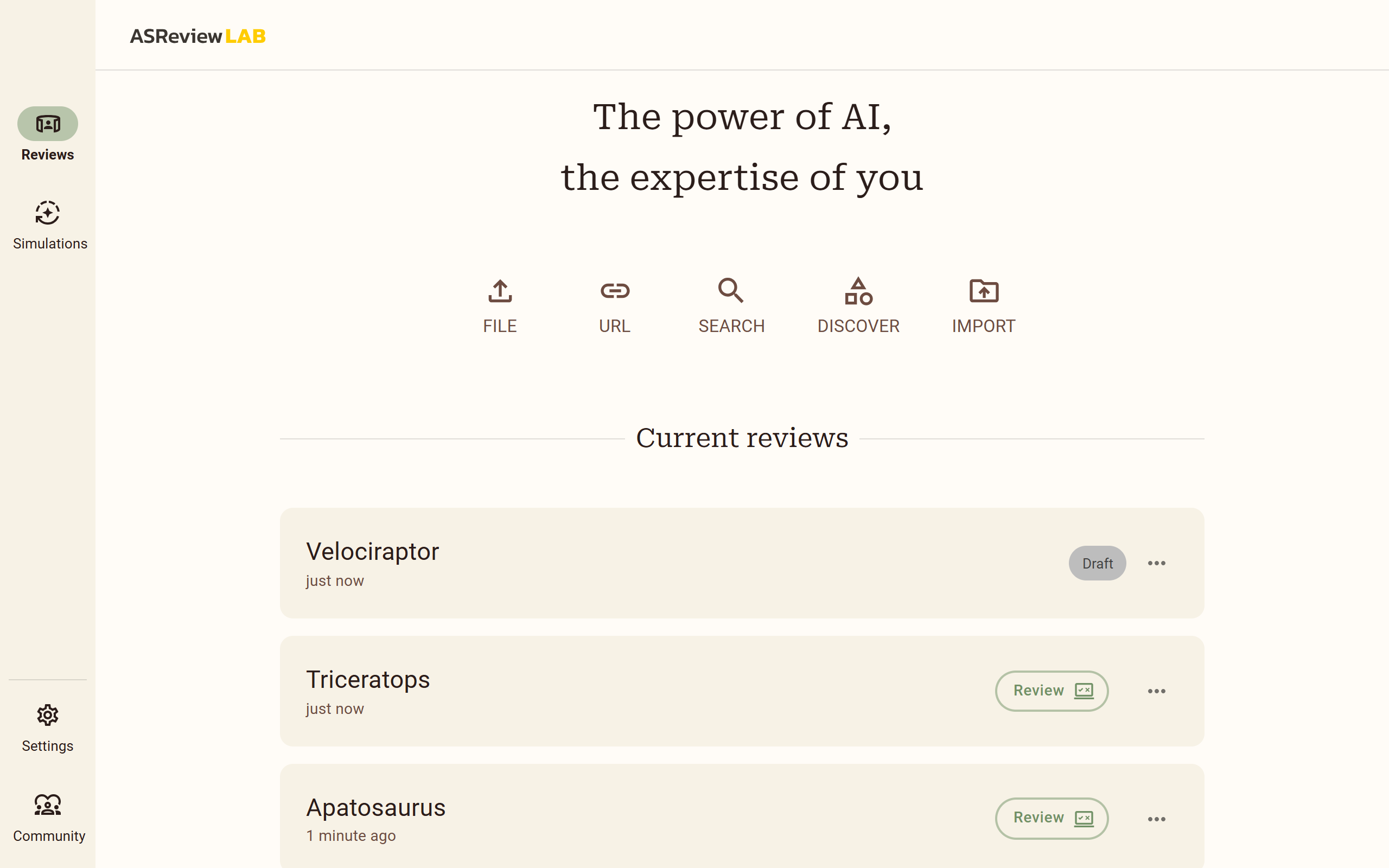

Manage projects

Easily import and export projects to share results, archive, and back up.

Inclusive design

Designed according to Material Design guidelines, with all users in mind.

“Having personally used ASReview, I can say it works beyond my wildest dreams, even after starting as a skeptic.”

– Mohammed Madeh Hawas on LinkedIn

Add your own taste

Customizable to all kinds of flavors

Scale to your own preferences regarding the scope of your study.

Quick start

Start your screening process instantly with just a single click.

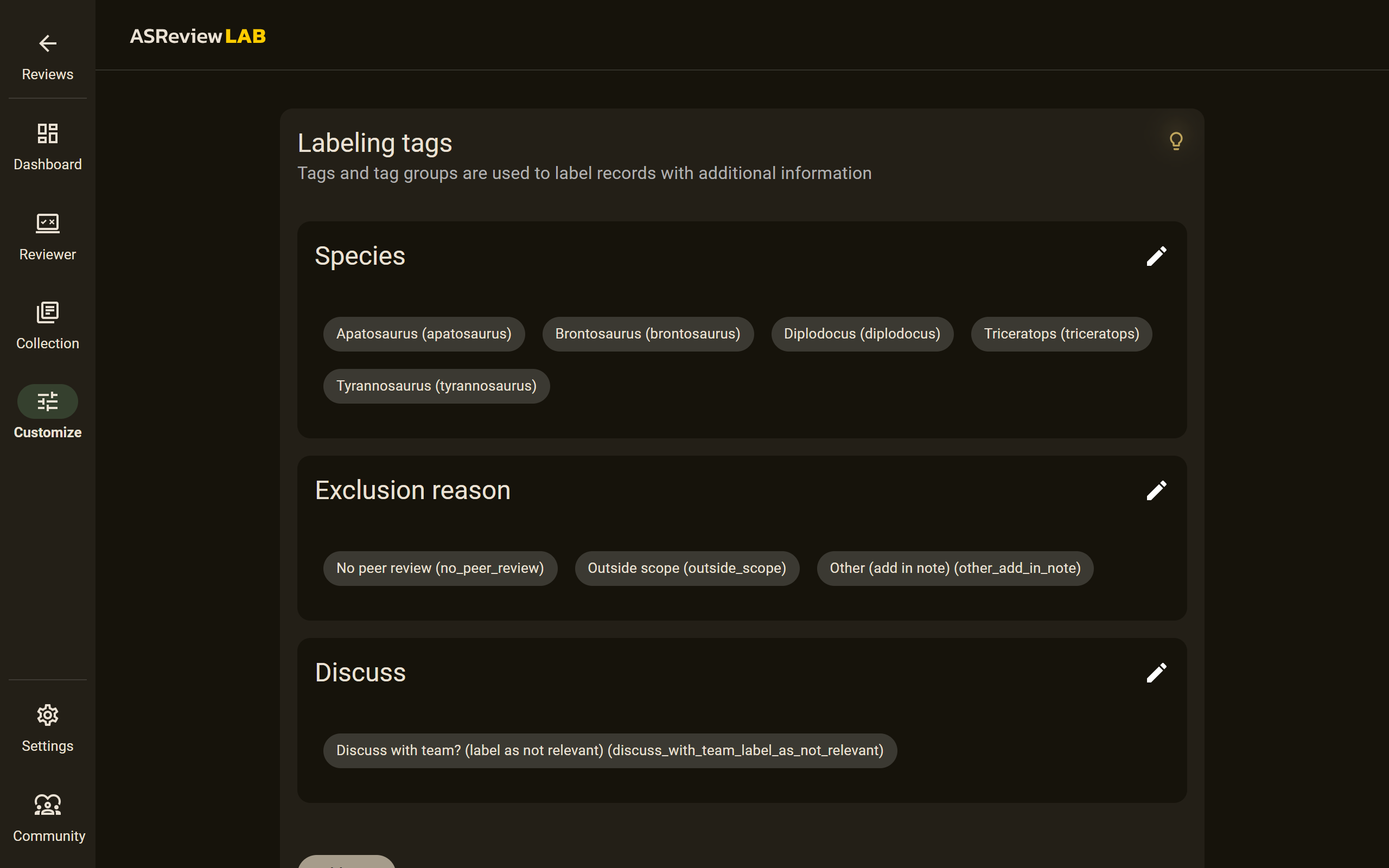

You decide

Tailor your setup with options such as tags and other custom settings.

Dark mode

A comfortable viewing experience with reduced eye strain.

Distraction-free reviewing

Hyper focus mode on

Minimize interruptions and stay deeply focused throughout your review process.

Only showing essentials

Displaying only key details to keep your review unbiased and focused.

Quick response

Lightning-fast results to keep your workflow uninterrupted.

Memory game

Search for the built-in game for when you need a distraction.

Use within your existing flow

Software that integrates at every level

Easily integrate with reference management systems, handle diverse file formats, and choose your preferred setup without disrupting your existing flow.

Multiple file formats

Easily import different file types without restrictions.

Feedback on your data

An overview that delivers real-time feedback on all your data essentials.

Circular data flow

Re-import exports into reference software for a circular workflow.

Experience ASReview AI

Find out how our AI helps you with a 95% disappearing act.

Questions & Answers

How user-friendly is ASReview for researchers conducting their first systematic review?

ASReview is designed with a clear interface and step-by-step workflow, making it straightforward for beginners. After installing Python on your local machine, most users find they can start screening within minutes, even with no prior machine learning experience. Institutions also have the option to use ASReview on a dedicated server environment, ensuring an even simpler setup for new users.

How does the screening process in ASReview work, from dataset import to final selection?

You begin by importing your dataset (e.g., a CSV, RIS, or Excel file) into the software. A quick-start option lets you start screening directly after the import, without configuring the AI components. Alternatively, you can customize the setup, including each AI component. For each record the software suggests, you decide whether to add it to your selection, and the model updates continuously based on your feedback. This loop continues until you have reviewed enough records to be confident you have found all the ones you need. At the end, you can export your final collection for post-processing.
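To make the screen-and-update loop concrete, here is a minimal, self-contained sketch in plain Python. It is not ASReview's implementation or API: the word-count scoring, the `screen` function, the `oracle` callback (standing in for the human reviewer), and the "stop after three irrelevant records in a row" rule are all assumptions chosen for illustration.

```python
from collections import Counter


def tokenize(text):
    """Split a record into lowercase words (toy tokenizer)."""
    return text.lower().split()


def score(record, relevant_counts, irrelevant_counts):
    """Toy relevance score: words seen in relevant records add points,
    words seen in irrelevant records subtract points."""
    return sum(relevant_counts[w] - irrelevant_counts[w]
               for w in set(tokenize(record)))


def screen(records, oracle, prior_relevant, stop_after_irrelevant=3):
    """Simulate screening: suggest a record, get a label, update, repeat.

    `oracle(index)` plays the reviewer and returns True for relevant records.
    The stopping rule is a hypothetical heuristic, not a recommendation.
    """
    relevant_counts, irrelevant_counts = Counter(), Counter()
    labels = {}

    # Seed the toy model with prior knowledge (known relevant records).
    for idx in prior_relevant:
        labels[idx] = True
        relevant_counts.update(set(tokenize(records[idx])))

    streak = 0  # consecutive irrelevant labels
    while len(labels) < len(records) and streak < stop_after_irrelevant:
        unlabeled = [i for i in range(len(records)) if i not in labels]
        # Suggest the unlabeled record the toy model ranks highest.
        best = max(unlabeled,
                   key=lambda i: score(records[i], relevant_counts,
                                       irrelevant_counts))
        is_relevant = oracle(best)
        labels[best] = is_relevant
        # Update the model immediately with the reviewer's feedback.
        target = relevant_counts if is_relevant else irrelevant_counts
        target.update(set(tokenize(records[best])))
        streak = 0 if is_relevant else streak + 1
    return labels


if __name__ == "__main__":
    records = [
        "active learning for systematic review screening",
        "machine learning speeds up systematic review screening",
        "recipe for chocolate cake",
        "screening abstracts with active learning models",
        "football match results",
        "gardening tips for spring",
        "weather forecast for the weekend",
    ]
    truly_relevant = {0, 1, 3}
    labels = screen(records, lambda i: i in truly_relevant, prior_relevant=[0])
    print(sorted(i for i, rel in labels.items() if rel))
```

In this toy run the relevant records surface first, and the loop stops before every record has been read, which is the basic idea behind prioritized screening.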

What happens with my data?

The software processes and saves your project on your own device. If you decide to use a server-based installation (e.g., at your institution), your data remains within that server’s environment, following your institution’s policies. In either case, your data and labels aren’t shared with us.

How does ASReview save my progress when I want to take a break between screening sessions?

ASReview automatically saves your progress in the background. Whenever you label a record, the software updates your project’s status. If you need to pause screening, you can simply close the interface. The next time you open your project, you’ll pick up exactly where you left off.
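The save-on-every-label idea can be sketched in a few lines of Python. This is an illustration only and does not reflect ASReview's actual project format: the JSON file layout and the `load_labels` and `save_label` helpers are hypothetical.

```python
import json
import os
import tempfile
from pathlib import Path


def load_labels(path):
    """Return previously saved labels, or an empty dict for a new project."""
    path = Path(path)
    if not path.exists():
        return {}
    with path.open(encoding="utf-8") as f:
        return json.load(f)


def save_label(path, record_id, is_relevant):
    """Persist one labeling decision right away.

    The file is written to a temporary name and then swapped into place,
    so an interrupted write cannot leave a half-written progress file.
    """
    path = Path(path)
    labels = load_labels(path)
    labels[str(record_id)] = is_relevant
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(labels, f)
    os.replace(tmp_name, path)  # atomic rename on the same filesystem
    return labels


if __name__ == "__main__":
    progress_file = Path(tempfile.mkdtemp()) / "progress.json"
    save_label(progress_file, 101, True)
    save_label(progress_file, 102, False)
    # Simulate closing and reopening the project: the labels are still there.
    print(load_labels(progress_file))
```

Because each decision is written as soon as it is made, closing the interface at any point loses nothing, and reopening the file restores the session state.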

What options are available for researchers to customize their screening workflow in ASReview?

Researchers can refine various aspects of the screening process. For example, you can import diverse file types (RIS, Excel, CSV), each of which can include different kinds of text data (e.g., scientific abstracts, newspaper articles). You can also incorporate prior knowledge by adding known relevant records, specify tags to help organize labeling, and even combine these tags with an initial data extraction round. These features let you shape ASReview to fit your specific research needs.
