Insights

Tools for extracting statistical results of performance metrics and advanced data visualizations.

Step inside the simulation lab

See how simulation mode lets you test AI models, evaluate strategies, and speed up your screening process using real data.

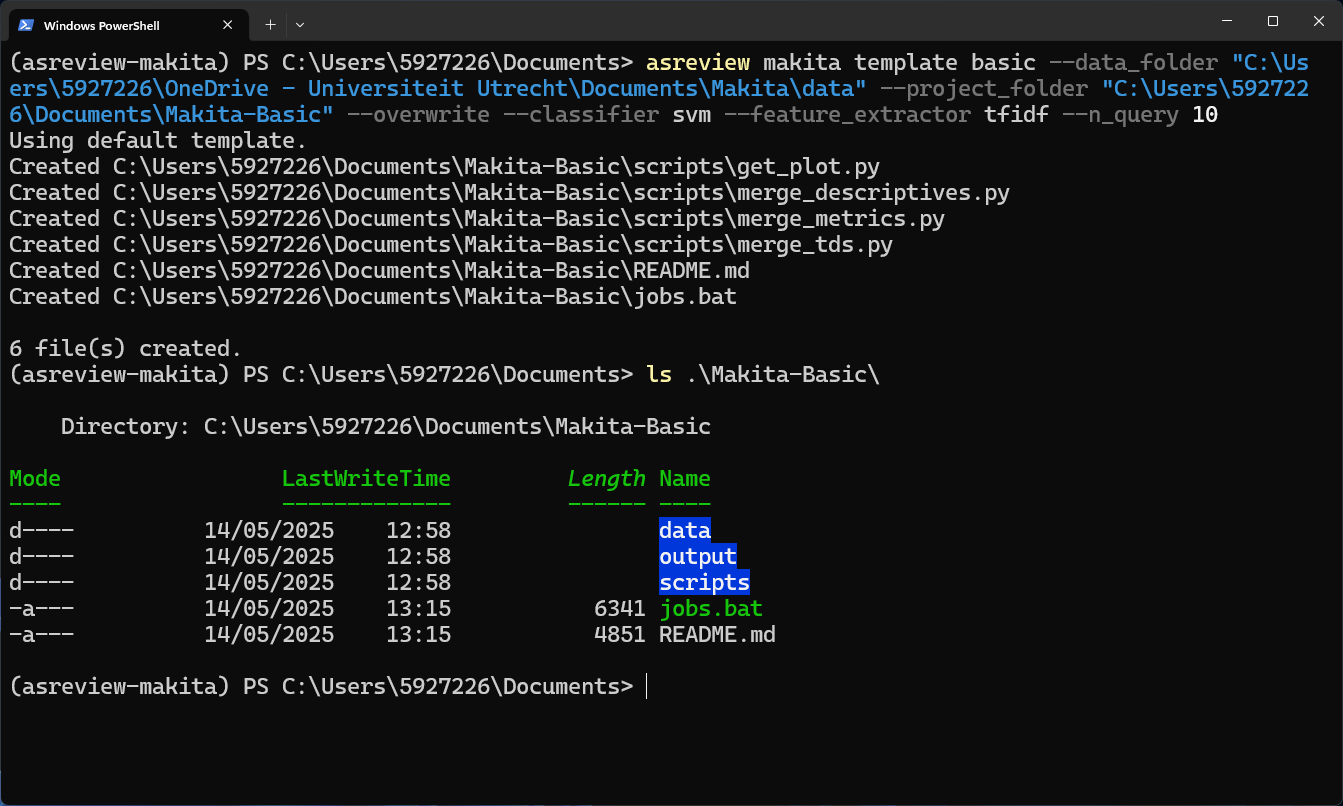

Makita workflow generator

ASReview’s Makita (MAKe IT Automatic) is a workflow generator for simulation studies.

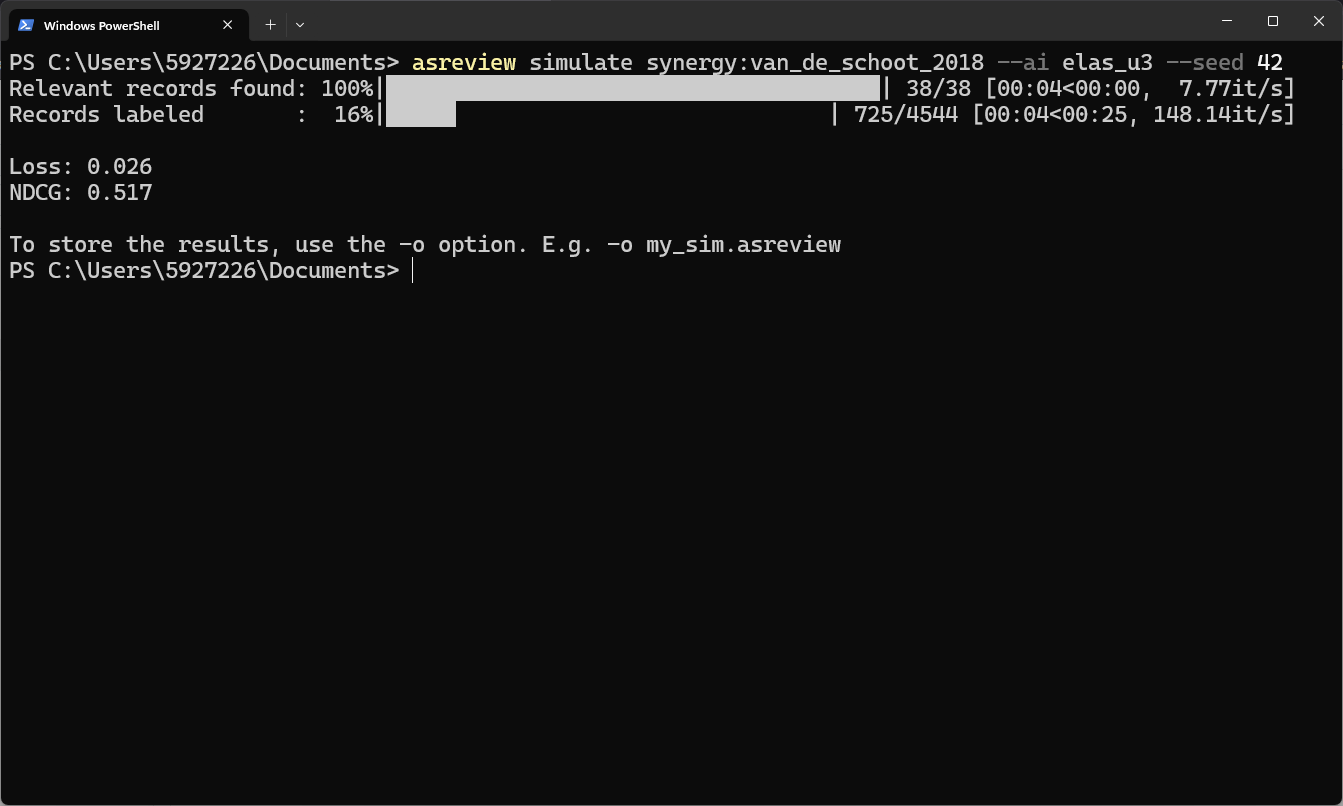

Simulation via Command Line Interface

ASReview LAB comes with a command line interface for simulating the performance of ASReview algorithms.

Simulate with Python API

For more control over the workings of the ASReview software, the ASReview Python API can be used.

Model refinement

Test existing models, or add and refine your own models in real time.

Performance

Hard core statistics and data visualizations.

Benchmark

Use the SYNERGY dataset to benchmark your model or test your data.

Researched, validated and confirmed

Read up on publications by data scientists across fields who research multiple dimensions of the AI.

Abstract

This paper introduces ASReview Makita, a tool designed to enhance the efficiency and reproducibility of simulation studies in systematic reviews. Makita streamlines the setup of large-scale simulation studies by automating workflow generation, repository preparation, and script execution. It employs Jinja and Python templates to create a structured, reproducible environment, aiding both novice and expert researchers. Makita’s flexibility allows for customization to specific research needs, ensuring a repeatable research process. This tool represents an advancement in the field of systematic review automation, offering a practical solution to the challenges of managing complex simulation studies.

Abstract

Active learning can be used for optimizing and speeding up the screening phase of systematic reviews. Running simulation studies mimicking the screening process can be used to test the performance of different machine-learning models or to study the impact of different training data. This paper presents an architecture design with a multiprocessing computational strategy for running many such simulation studies in parallel, using the ASReview Makita workflow generator and Kubernetes software for deployment with cloud technologies. We provide a technical explanation of the proposed cloud architecture and its usage. In addition to that, we conducted 1140 simulations investigating the computational time using various numbers of CPUs and RAM settings. Our analysis demonstrates the degree to which simulations can be accelerated with multiprocessing computing usage. The parallel computation strategy and the architecture design that was developed in the present paper can contribute to future research with more optimal simulation time and, at the same time, ensure the safe completion of the needed processes.

Abstract

Background

Conducting a systematic review demands a significant amount of effort in screening titles and abstracts. To accelerate this process, various tools that utilize active learning have been proposed. These tools allow the reviewer to interact with machine learning software to identify relevant publications as early as possible. The goal of this study is to gain a comprehensive understanding of active learning models for reducing the workload in systematic reviews through a simulation study.

Methods

The simulation study mimics the process of a human reviewer screening records while interacting with an active learning model. Different active learning models were compared based on four classification techniques (naive Bayes, logistic regression, support vector machines, and random forest) and two feature extraction strategies (TF-IDF and doc2vec). The performance of the models was compared for six systematic review datasets from different research areas. The evaluation of the models was based on the Work Saved over Sampling (WSS) and recall. Additionally, this study introduces two new statistics, Time to Discovery (TD) and Average Time to Discovery (ATD).

Results

The models reduce the number of publications needed to screen by 63.9 to 91.7% while still finding 95% of all relevant records (WSS@95). Recall of the models was defined as the proportion of relevant records found after screening 10% of all records and ranges from 53.6 to 99.8%. The ATD values range from 1.4% to 11.7%, which indicates the average proportion of labeling decisions the researcher needs to make to detect a relevant record. The ATD values display a similar ranking across the simulations as the recall and WSS values.

Conclusions

Active learning models for screening prioritization demonstrate significant potential for reducing the workload in systematic reviews. The Naive Bayes + TF-IDF model yielded the best results overall. The Average Time to Discovery (ATD) measures performance of active learning models throughout the entire screening process without the need for an arbitrary cut-off point. This makes the ATD a promising metric for comparing the performance of different models across different datasets.

Abstract

SYNERGY is a free and open dataset on study selection in systematic reviews, comprising 169,288 academic works from 26 systematic reviews. Only 2,834 (1.67%) of the academic works in the binary classified dataset are included in the systematic reviews. This makes the SYNERGY dataset a unique dataset for the development of information retrieval algorithms, especially for sparse labels. Due to the many variables available per record (i.e. titles, abstracts, authors, references, topics), this dataset is useful for researchers in NLP, machine learning, network analysis, and more. In total, the dataset contains 82,668,134 trainable data points. The easiest way to get the SYNERGY dataset is via the synergy-dataset Python package. See https://github.com/asreview/synergy-dataset for all information.

Abstract

This study advocates for large-scale simulations as the gold standard for assessing active learning models for the prioritization of screening order in systematic reviews. The use of active learning to prioritize potentially relevant records in the screening phase of systematic reviews has seen considerable progress and innovation. This rapid development, however, has highlighted the disparity between the development of these methodologies and their rigorous evaluation, stemming from constraints in simulation size, lack of infrastructure, and the use of too few datasets. In this study, two large-scale simulations evaluate active learning solutions for systematic review screening, involving over 29 thousand simulations and over 150 million data points. These simulations are designed to provide robust empirical evidence of performance. The first study evaluates 13 combinations of known well-performing classification models and feature extraction techniques such as TF-IDF, SVM, Random Forest, and more across high-quality datasets sourced from the SYNERGY dataset. This baseline is then used to expand the scope of the evaluation further in the second study by incorporating a wider array of classification models and feature extractors such as FastText, pretrained transformer models, and XGBoost, for a total of 92 model combinations. The spectrum of performance varies considerably between datasets, models, and screening progression, from marginally better than random reading to near-flawless results. Still, every single model-feature extraction combination outperforms random screening. Results are publicly available for analysis and replication.

Contribute to ASReview

Join our community on GitHub and start your simulations.

Questions & Answers

What types of models and algorithms are supported, and can custom models be integrated?

ASReview offers pre-set model combinations:

ELAS-Ultra in version 2.0 is a combination of TF-IDF (bi-grams) and the Linear Support Vector Classifier from scikit-learn. ELAS-heavy in version 2.0 employs MXBAI, while ELAS-lang employs Multilingual e5 text embeddings, both in combination with SVM and available via the Dory extension. The hyperparameters are tuned on the SYNERGY dataset. We continuously improve the hyperparameters and ship updated pre-sets in newer releases whenever we find better parameters, so that users always get the best performance we can offer. We have implemented a versioned naming scheme for our pre-sets to maintain reproducibility while delivering the latest models and parameters. For instance, ELAS-u4 represents the current Ultra-series configuration, and future updates may introduce ELAS-u5 while retaining support for u4. Additionally, to replicate the performance of ASReview v1 in v2, we provide ELAS-u3, which uses the same model combination as the default in v1. This naming scheme extends to all pre-set types: ultra, heavy, and lang. The most recent pre-sets are available at: https://github.com/asreview/asreview/blob/main/asreview/models/models.py.

Many other model combinations can be chosen (https://asreview.readthedocs.io/en/latest/reference.html#models), but not every combination has tuned hyperparameters. Moreover, users can add new models via the Template Extension for New Models, which provides a straightforward path for incorporating custom or emerging technologies (e.g., additional HuggingFace models). This flexibility helps ASReview keep pace with new machine learning techniques for systematic reviews.

How does ASReview utilize machine learning to optimize the systematic review process?

The information retrieval (IR) task involves finding all relevant records by querying specific records proposed by a learner. In a classical active learning set-up, an AI agent makes screening recommendations, and a human-in-the-loop (the “oracle”) validates them to optimize the model’s performance. In recent literature, Active Learning To Rank (ALTR) has been proposed, in which an oracle (a human expert) interactively queries the active learner (also called the AI agent or ranking agent), which ranks the unlabeled data. Researcher-in-the-loop active learning is a sub-case of ALTR, where the oracle (human screener) iteratively requests the highest-ranked records with the goal of retrieving the relevant ones as quickly as possible.
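The researcher-in-the-loop cycle described above can be sketched as a small simulation. This is a minimal illustration under stated assumptions, not ASReview's implementation or API: the word-count scorer stands in for a real classifier and feature extractor, and the names `score`, `simulate`, and `RECORDS` as well as the sample texts are hypothetical. In a simulation the oracle's decision is simply looked up from the known label.

```python
from collections import Counter

# Hypothetical toy dataset: (text, label) with 1 = relevant, 0 = irrelevant.
RECORDS = [
    ("active learning for screening abstracts", 1),
    ("soil erosion in river deltas", 0),
    ("machine learning screening of systematic reviews", 1),
    ("river sediment transport models", 0),
    ("active learning reduces screening workload", 1),
    ("erosion control in coastal soil", 0),
]


def score(text, relevant_words, irrelevant_words):
    """Toy ranking score: +count for words seen in relevant records,
    -count for words seen in irrelevant records."""
    return sum(relevant_words[w] - irrelevant_words[w] for w in text.lower().split())


def simulate(records, prior_indices):
    """Return the order in which record indices are screened.

    Each iteration retrains the toy learner on all labels so far,
    ranks the unlabeled records, and queries the highest-ranked one.
    """
    labeled = list(prior_indices)
    unlabeled = [i for i in range(len(records)) if i not in labeled]
    while unlabeled:
        # "Train": count words in records labeled so far.
        relevant_words, irrelevant_words = Counter(), Counter()
        for i in labeled:
            text, label = records[i]
            target = relevant_words if label == 1 else irrelevant_words
            target.update(text.lower().split())
        # Rank the unlabeled records and pick the top one (ties: first seen).
        best = max(
            unlabeled,
            key=lambda i: score(records[i][0], relevant_words, irrelevant_words),
        )
        # The oracle "labels" the record; here the known label is reused.
        labeled.append(best)
        unlabeled.remove(best)
    return labeled


# Start from one relevant and one irrelevant record as prior knowledge.
screening_order = simulate(RECORDS, prior_indices=[0, 1])
```

With this toy data, the two remaining relevant records (indices 4 and 2) are queried before the irrelevant ones, which is the behavior screening prioritization aims for.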

What are the data requirements for ASReview, and how should datasets be prepared?

ASReview supports datasets of textual records, such as titles and texts from scientific papers, news articles, or policy reports, acquired through a systematic search. Typically, only a small portion of these records will be relevant to our users, so the primary challenge is effectively identifying those records.

Data can be natively presented to ASReview in two main formats. First, tabular datasets are accepted in CSV, TSV, or XLSX formats. If some records are already labeled, a column named “included” or “label” should indicate this using 1 for relevant and 0 for irrelevant, while unlabeled entries remain blank. Second, RIS files exported from digital libraries (e.g., IEEE Xplore, Scopus) or citation managers (e.g., Mendeley, RefWorks, Zotero, EndNote) can also be used. If some records are already labeled, the labels should be stored in the “N1” field with the tags “ASReview_relevant”, “ASReview_irrelevant”, or “ASReview_not_seen”, enabling re-import and continuation of ongoing screening.
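As a rough illustration of the tabular format, the sketch below builds a three-record CSV in memory and checks its label column. Only the “included” column and its 1/0/blank convention come from the description above; the `title` and `abstract` column names, the sample records, and the helpers `to_csv_text` and `count_labels` are assumptions made for this example.

```python
import csv
import io

# Hypothetical records: "1" = relevant, "0" = irrelevant, "" = not yet labeled.
RECORDS = [
    {"title": "Active learning for screening", "abstract": "We study ...", "included": "1"},
    {"title": "Soil erosion in deltas", "abstract": "This paper ...", "included": "0"},
    {"title": "Text mining for reviews", "abstract": "An overview ...", "included": ""},
]


def to_csv_text(records):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "abstract", "included"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def count_labels(csv_text, label_column="included"):
    """Count relevant, irrelevant, and unlabeled rows; reject other values."""
    counts = {"relevant": 0, "irrelevant": 0, "unlabeled": 0}
    for row in csv.DictReader(io.StringIO(csv_text)):
        value = row[label_column].strip()
        if value == "1":
            counts["relevant"] += 1
        elif value == "0":
            counts["irrelevant"] += 1
        elif value == "":
            counts["unlabeled"] += 1
        else:
            raise ValueError(f"Unexpected label value: {value!r}")
    return counts


csv_text = to_csv_text(RECORDS)
label_counts = count_labels(csv_text)
```

A check like `count_labels` can catch stray label values (for example "yes" or "2") before a dataset is imported.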

How does ASReview ensure transparency and reproducibility in active learning-based screening?

ASReview maintains a project file that captures essential set-up information, including the hyperparameters of the AI agent’s learner, the feature matrix (or matrices), and any initially labeled records. This ensures that the starting configuration can be fully reconstructed.

What are the best practices for evaluating model performance?

There are many different metrics one can use to evaluate and compare the performance of different model-dataset combinations. Several are available in the Insights package (e.g., recall, WSS@95, ATD), and others (e.g., Loss) can be calculated on the fly.
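To make the three named metrics concrete, here is a minimal sketch that computes them from a list of labels in screening order (1 = relevant, 0 = irrelevant). It is not the Insights package's code: the function names and the example list are hypothetical, WSS follows the commonly used definition (share of records left unscreened at the target recall, minus 1 minus that recall), and ATD is simplified to the mean screening position of the relevant records as a share of all records. Published definitions may treat prior knowledge and averaging over runs differently.

```python
import math


def recall_at(labels, fraction):
    """Share of all relevant records found after screening `fraction` of the records."""
    n_relevant = sum(labels)
    if n_relevant == 0:
        raise ValueError("No relevant records in labels.")
    n_screened = math.ceil(fraction * len(labels))
    return sum(labels[:n_screened]) / n_relevant


def wss_at(labels, recall_level=0.95):
    """Work Saved over Sampling at the given recall level."""
    n_total = len(labels)
    n_needed = math.ceil(recall_level * sum(labels))
    if n_needed == 0:
        raise ValueError("No relevant records in labels.")
    found = 0
    for n_screened, label in enumerate(labels, start=1):
        found += label
        if found >= n_needed:
            # Records left unscreened, minus what random screening would leave.
            return (n_total - n_screened) / n_total - (1 - recall_level)
    raise ValueError("Recall level was never reached.")


def average_time_to_discovery(labels):
    """Mean position of relevant records, as a share of all records (simplified ATD)."""
    positions = [i for i, label in enumerate(labels, start=1) if label == 1]
    if not positions:
        raise ValueError("No relevant records in labels.")
    return sum(positions) / len(positions) / len(labels)


# Hypothetical screening order: 20 records, relevant ones at positions 1, 2, 4, and 7.
SCREENING_ORDER_LABELS = [1, 1, 0, 1, 0, 0, 1] + [0] * 13

recall_10 = recall_at(SCREENING_ORDER_LABELS, 0.10)        # 2 of 4 found in first 2 records
wss_95 = wss_at(SCREENING_ORDER_LABELS, 0.95)              # all 4 found after 7 of 20 records
atd = average_time_to_discovery(SCREENING_ORDER_LABELS)    # mean position 3.5 out of 20
```

For this example the values work out to a recall of 0.5 after 10% of records, a WSS@95 of about 0.6, and an ATD of 0.175. Unlike the first two metrics, ATD needs no cut-off point.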

Subscribe to our newsletter!

Stay on top of ASReview’s developments by subscribing to the newsletter.